You install your brand-new SQL Server using the installation wizard, and at the very end you hit the Finish button. Woohoo – now we can go into production with our server! Sorry, but that’s not really the truth, because your brand-new SQL Server is misconfigured by default!

Yes, you read that correctly: a default installation of SQL Server is misconfigured in so many different areas. In today’s blog posting I want to show you the 3 configuration options that you have to change immediately after the installation for faster performance. Let’s start!

Max Server Memory

Disclaimer: If you run your SQL Server these days on a 32 bit system, please throw away your hardware, buy a 64 bit system, install the 64 bit version of SQL Server, and continue reading here.

By now you should have a 64 bit SQL Server in front of you. 64 bit means that you can theoretically address a main memory size of 2^64 = that is 16 Exabytes (1 billion gibabytes)! Because of this huge amount of memory, computer vendors currently limit the address bus of a 64 bit system to “only” 48 bits – the whole 64 bits doesn’t really make sense. With an address space of 48 bits you can address up to 256 TB of memory – that’s still a huge amount of space.

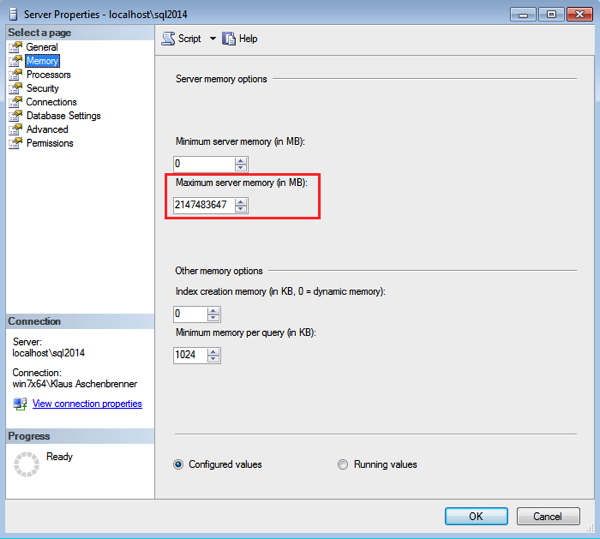

You can use the Max Server Memory configuration option to configure how much memory SQL Server can consume. The following picture shows this configuration option after a default installation of SQL Server on a 64 bit system.

As you can see from the previous picture, SQL Server is configured by default to consume up to 2147483647 MB – that is 2 Petabytes! Hmm, with an address bus of 48 bits we can physically only address up to 256 TB of memory, and now SQL Server can consume up to 2 Petabytes? Something is wrong here… The Maximum Server Memory setting is just the largest possible 32 bit integer value – 2147483647. Nothing more. So SQL Server can consume more memory that can be addressed physically? Not really a good default configuration. SQL Server can eat up your whole physical memory by default!

You should always change this configuration option, so that you also give your OS some memory to live and breathe. Normally (without any other programs/processes on the server) you should give the OS at least 10% of the physical memory. This means that you have to lower the Max Server Memory setting. With 64 GB of physical memory I would configure the Max Server Memory setting with around 56 GB, so that the OS can consume and work with the remaining 8 GB.

Cost Threshold for Parallelism

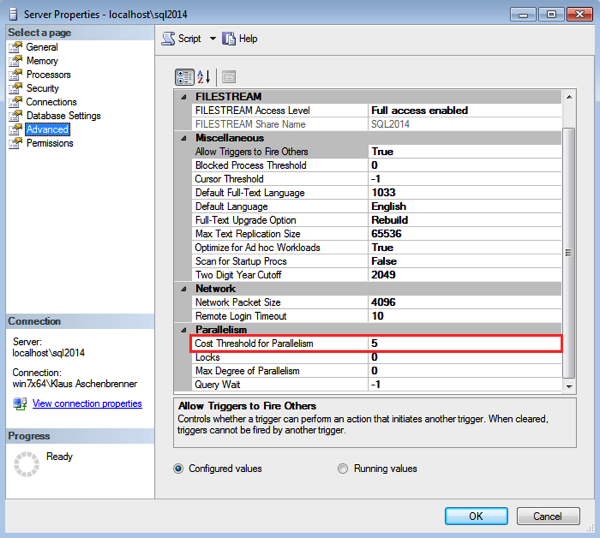

The next configuration option that you have to change has to do with how SQL Server handles parallelism. Parallelism means that SQL Server is able to run operators in an execution plan across multiple worker threads. The goal of parallelism is to improve the throughput of your queries. The first configuration option that influences parallelism in SQL Server is the so-called Cost Threshold for Parallelism:

The number that you configure here defines the query costs at which the Query Optimizer tries to find a cheaper parallel execution plan. If the found parallel plan is cheaper, then this plan is executed, otherwise the serial plan is executed. As you can see from the previous picture, a default configuration of SQL Server uses a cost threshold of 5. When the query cost of your serial plan is larger than 5, then the Query Optimizer runs the query optimization again to find a possible cheaper parallel execution plan.

Unfortunately a cost factor of 5 is a very small number these days. Therefore SQL Server tries to parallelize your execution plans too soon. But parallelism only makes sense when you deal with larger queries – like in a reporting or Data Warehousing scenario. In a pure OLTP scenario a parallel plan is an indication of a bad indexing strategy, because when you have a missing index SQL Server has to scan your complete Clustered Index (in combination with a Filter or a residual predicate), and therefore your query costs will get larger, they cross the cost threshold, and finally the Query Optimizer will give you a parallel plan. And people always get worried when they see parallel execution plans! But the root cause was just a missing Non-Clustered Index.

During my various consulting engagements and SQL Server Health Checks, I always recommend a Cost Threshold for Parallelism of at least 20 or even 50. That way you can make sure that SQL Server only tries to parallelize larger queries for you. And even if you have a parallel plan in front of you, you should think about whether you can make this query plan cheaper by adding a supporting Non-Clustered Index. And as I have already covered in a previous SQL Server Quickie, CXPACKET waits are not an indication that you have problems with parallelism in your system!

Max Degree of Parallelism (MAXDOP)

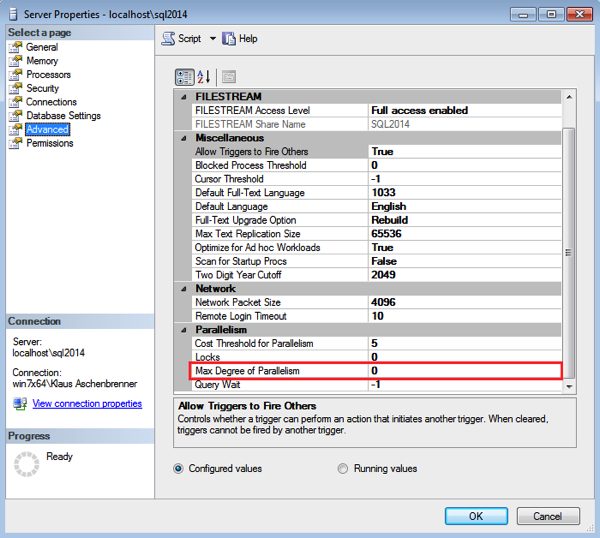

When an execution plan goes parallel in SQL Server, the Max Degree of Parallelism defines how many worker threads each parallel operator in the execution plan can use. The following picture shows the default configuration of this option.

As you can see, SQL Server uses the default value of 0. This value means that SQL Server tries to parallelize your execution plan across all CPU cores that are assigned to SQL Server (by default all cores are assigned to SQL Server!). As you might expect this setting also doesn’t make sense, especially when you have a larger system with a huge amount of CPU cores. Parallelism itself introduces overhead, and this overhead gets larger and larger as you use more and more worker threads.

A recommendation is to set the Max Degree of Parallelism to the number of cores that you have in one NUMA node. Therefore SQL Server tries to keep parallel plans in one NUMA node during query execution which also improves performance.

Sometimes you will also see suggestions to set the Max Degree of Parallelism option to 1. This is a really bad advice, because this makes your *complete* SQL Server single-threaded! And even maintenance operations (like Index Rebuilds) are executed single-threaded, and this will seriously hurt the performance! Of course there are also some “award winning” products which dictate that you use a MAXDOP of 1…

Summary

After you have installed your SQL Server, the real work for the DBA begins: you have to configure your SQL Server installation to your hardware layout. As you have seen in this blog posting, the default configuration of SQL Server is simply misconfigured by default. Therefore it is very important to change some configuration options immediately after the installation. I have already seen SQL Servers in production with the default options that I have mentioned here, because they “will be configured later”. And “later” never ever happened…

So please do yourself a favour today, and configure your SQL Server for maximum performance and throughput!

Thanks for your time,

-Klaus

29 thoughts on “The 3 Configuration Options that you always have to change in SQL Server!”

Can these options be set in a T-SQL script as well? Care to share that T-SQL with us? That would be really nice and helpful! 🙂

Hello Mark,

You can change these options through a call to sp_configure.

Thanks,

-Klaus

HI Klaus,

I posted a message on a facebook group what values should be assigned to cost Threshold for Parallelism and MAXDOP as I don’t see any guidelines or articles to determine a value. Many of the members replied back as “leave it alone” unless you have a problem. My problem is CPU running at 80% and seeing cxpackets . Thank you for your quick note on cxpackets. Is there a way to prove cost Threshold for Parallelism and MAXDOP should be changed ?

Hello,

Thanks for your comment.

As I have written in the blog posting, I would set the Cost Threshold at least on 20 or even 50.

And the MAXDOP should be set to the number of cores that you have in one NUMA node.

If your CPU usage is at 80% and you see CXPACKET waits, you will normally have problems with your indexing strategies.

Just check for expensive queries (high I/O, CPU consumption) and try to make them cheaper with additional (helpful!) Non-Clustered Indexes.

Trust me – your CPU usage and your CXPACKET waits will go down!

As I have mentioned in my SQL Server Quickie about CXPACKET waits (see http://www.SQLpassion.at/archive/2014/12/01/sql-server-quickie-20-cxpacket-waits), they don’t tell you anything, because the coordinator thread always produces CXPACKET waits.

Thanks,

-Klaus

Just want to mention that setting Max DOP to 1 is not always bad and sometimes it is a must. I have experienced “dop 1” scenario in the first hand several years ago. On the other hand, the benefit of the parallel execution is way non-significant compared with performance stability. I agree with the other two configuration recommendation though.

Hello Jeff,

Thanks for your comment.

If you want to set a MAXDOP of 1 for your workload, you can also use other features in SQL Server – like Resource Governor (Enterprise Edition only!).

The problem that I have with a MAXDOP of 1 on the instance level is that *everything* runs single-threaded, even your Index Rebuild operation that would benefit from parallelism.

Thanks,

-Klaus

Resource governor really does not solve the scenario (i.e. performance stability) where max dop needs to be 1.

This configuration setting cannot be said that it should NOT be 1. Actually, as I pointed out before, it needs to be 1 SOMETIMES. Believe it or not, I was once a “hater” of setting dop=1, but I was convinced after I tried hard to make DOP 1 and still cannot achieve my goal. In short, DOP = 1 solved my performance stability issue with almost no noticeable benefit lost.

Hello Jeff,

Thanks for your comment.

Why do you want to run your *complete* database server single-threaded?

It’s like driving with a Ferrari with the 1st gear on a highway – doesn’t really make sense.

With Resource Governor you are able to partition your workload into workload groups, and set the MAXDOP option on the workload group itself.

Thanks,

-Klaus

let say i have a server has 4 cpus and i don’t update the Max Degree of Parallelism (MAXDOP) and leave it as default 0

This means that SQL Server tries to parallelize the execution plan across all CPU , is this bad?

Hello Mahmoud,

With just 4 cores SQL Server will parallelize a parallel execution plan across the 4 cores – correct.

In that case it wouldn’t make a huge difference to the default option of MAXDOP, but we want to be as explicit as possible!

Imagine your SQL Server is virtualized (ouch…), and your VMware admin (what is HyperV?) assigns at one day more cores to your VM…

Thanks,

-Klaus

Nice job. That last one is still fairly new (at least to me) so I’m particularly glad you mentioned it. That and NOT using MAXDOP 1 :).

Thanks for your great feedback, Kenneth!

Highly appreciated! 🙂

-Klaus

Klaus – what about configuring the number of TempDB files? Important to do so right away or should it be a more metric driven approach.

Good stuff, love receiving your newsletter

Bill

Hello Bill,

Thanks for your comment.

Yes, you are absolutely right – TempDb is also misconfigured by default!

I just had to make sure to use the 3 most prominent configuration changes – otherwise I would have written a whole book instead of a blog posting 😉

There is also a SQL Server Quickie where I have talked about TempDb configuration: http://www.SQLpassion.at/archive/2013/07/23/sql-server-quickie-7-tempdb-latch-contention/

Thanks,

-Klaus

SharePoint recommends setting the MAXDOP to 1 for the whole SQL server, as well as turning off auto update statistics, which might both sound bad. But the application itself manages statistics, and keeping each individual query single threaded when there may be hundreds or thousands of concurrent queries coming from multiple web front ends is actually a good scaling technique for that workload. As with all things SQL, sometimes the answer is more complex than “never do this”

That doesn’t mean every SharePoint farm should do so. But, that particular advice comes from testing for a specific workload, aimed at the (utterly awful and poorly indexed) DB SP is using, and I would recommend testing your workload with all their settings as recommended as a bsseline, then changing them one at a time to benchmark the effect

Hello Marc,

Thanks for your comment. The problem is that SharePoint requires a MAXDOP of 1 at the instance level. People normally have additional database on an instance – where the instance is not dedicated to SharePoint. So it also influences other databases. And what you do about your Index Rebuilds? They are also running single-threaded…

It would be much better to recommend Resource Governor to separate the SharePoint workload, and only apply a MAXDOP of 1 to that specific workload.

Thanks,

-Klaus

We obviously work on different sized SharePoint instances!

I agree with you, if other things are on there. But if you’re at the size that you really need these settings, you absolutely should not have anything else running on SPs DB server, and in fact often there will be two SQL servers dedicated to SharePoint, one for the search DBs, and another for content DBs

This is great, especially the advice on Cost Threshold for Parallelism, which I have never seen elsewhere. Off to change this on test servers right now. Many thanks Klaus.

Thanks for your great feedback, John!

Hi Klaus,

I’d also always go with enabling “optimize for ad hoc workloads” and enable “backup compression default” – assuming you’re not running SQL2005 🙂

Paul K

Hello Paul,

These are also 2 *great* options that you should *always* enable.

Unfortunately I had to concentrate in this blog posting on the 3 most prominent ones 🙂

Thanks!

-Klaus

Hello and great article!!

I just wanted to add a comment on the Max/Min memory. When the server has more or less memory then 64GB then use this formula to calculate MAX/MIN memory.

1. Reserve 1 Gigabyte (GB) of RAM for the Operating System (OS)

2. Reserve 1GB of RAM for each 4GB of RAM installed from 4 – 16 GB

3. Add 1GB of RAM for every 8GB of RAM above 16GB’

4. For MIN memory setting, it is a good start to set to half the total MAX memory.

I have created a script the memory setting at my blog “http://sqlsurgeon.blogspot.ca/2011/12/automatically-calculate-max-memory.html”

Thanks for you work.

Rudy

Hello Rudy,

Thanks for your great comment.

Just 2 remarks:

1. As a rough formula you should give the OS at least 10% of the memory

2. I would leave the Min Server Memory setting as it, because otherwise you get can into some troubles in combination with Locked Pages in Memory – read through the comments section at https://www.simple-talk.com/sql/database-administration/great-sql-server-debates-lock-pages-in-memory.

Thanks,

-Klaus

I think it’s better to remark that this formula comes from a Jonathan Kehayias’ recommendation, as everybody can see on his post http://www.sqlskills.com/blogs/jonathan/how-much-memory-does-my-sql-server-actually-need/

It’s always a good thing to quote the originals…

how do you explain microsoft best practice for their own product like BizTalk –

msdn.microsoft.com/en-us/library/cc296892(v=bts.10).aspx,

SharePoint – https://technet.microsoft.com/en-us/library/hh292622.aspx#Anchor_1,

CRM Dynamics – https://blogs.msdn.microsoft.com/crminthefield/2012/10/10/crm-upgrade-best-practices/

Hello Sharon,

These are different Microsoft product teams, which have no idea about SQL Server 😉

Thanks,

-Klaus

Great post.

I do have a question though.

Is there a way to change these parameters AT install instead after SQL Server is already installed?

I was looking for an unattended install because we need to create a bunch of servers and I thought that it would be great these parameters could be changed at install time as well.

Thanks in advance for any feedback!

Abraham

Great post. Thanks a lot. Just to perfect your work, you might show pictures with the correct settings (example) instead the default 😉