It happened silently, and we lost almost everything – our RAID 5 storage array crashed! No, I’m not talking about someone else: today I’m talking about our RAID 5 array at home, why it failed, and why I failed!

RAID 5 – the basics

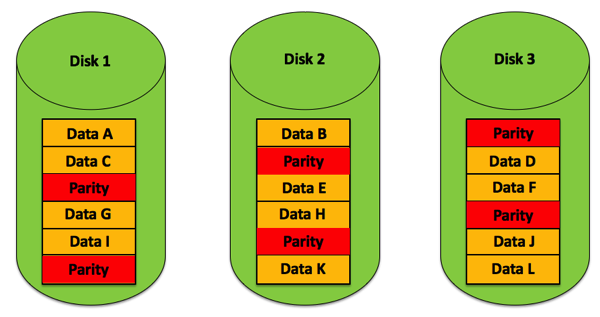

A RAID 5 array is the cheapest solution if you want to have redundancy: it “costs” you just one disk as overhead where the storage controller writes parity information. With this parity information the storage controller is able to recreate the data on a disk when *one* disk crashes. The following picture illustrates this concept.
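To make the parity idea a bit more concrete, here is a minimal Python sketch. It assumes the classic XOR parity scheme and treats a single stripe as three tiny data blocks plus one parity block; the block contents and the helper name `xor_blocks` are made up for illustration, and a real controller works on much larger blocks and rotates the parity across the disks.

```python
from functools import reduce

def xor_blocks(blocks):
    """XOR equally sized byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# One stripe across a 4-disk array: three data blocks plus one parity block.
data = [b"RAW1", b"RAW2", b"RAW3"]
parity = xor_blocks(data)

# Simulate losing the second disk: XOR the surviving blocks with the parity.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt)  # b'RAW2'
```

With two blocks of the same stripe missing, there is only one equation for two unknowns, which is why a second failed disk takes the whole volume down.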

A RAID 5 array is great if you store a huge amount of data, because you just need one additional disk to provide redundancy. In our case at home we use a 4-bay QNAP storage for all of our personal data (mostly for pictures, movies, downloads, Time Machine backups). The 4-bay QNAP storage was equipped with 4x 2 TB Western Digital drives, which gave us 6 TB of storage space in a RAID 5 configuration – this sounds great but isn’t that much when you take a huge amount of pictures in a RAW format…
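The capacity arithmetic is simple enough to write down. The following Python sketch is a simplified model that assumes equally sized drives and ignores file system overhead; the function name `usable_tb` is hypothetical. It also covers the RAID 10 layout that comes up later in this post.

```python
def usable_tb(level, drives, size_tb):
    """Usable capacity in TB for equally sized drives (simplified model)."""
    if level == "raid5":
        return (drives - 1) * size_tb   # one drive's worth of space holds parity
    if level == "raid10":
        return drives // 2 * size_tb    # every drive is mirrored once
    raise ValueError(f"unsupported level: {level}")

print(usable_tb("raid5", 4, 2))   # 6
print(usable_tb("raid10", 4, 4))  # 8
```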

Our backup strategy

As Scott Hanselman writes, you should have a 3-2-1 backup strategy:

- 3 different backups

- 2 different formats

- 1 offsite backup (like the famous so-called “cloud”)

In our case the backup strategy was quite simple: we had a backup on a different RAID 1 enclosure, and the most important stuff (pictures, movies) was also stored on our Google Drive account – but these additional backups were several months old…

The crash

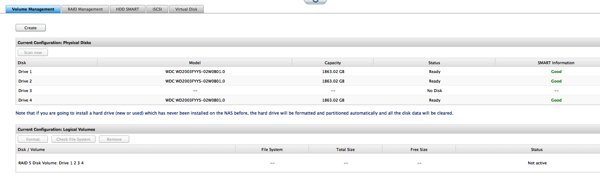

2 weeks ago I wanted to access our storage, but I wasn’t able to connect. Therefore I logged into the management console, and there I saw that the RAID 5 volume was offline:

I immediately panicked! Not active??? When you lose one disk in a RAID 5 array, the volume should go into degraded mode. But offline? What the f… happened here? Next I checked the QNAP storage logs. According to the log, the 2nd drive had been kicked out of the RAID 5 volume several weeks earlier, when we had a power outage.

78,"Warning","2015-07-07","04:35:03","System","127.0.0.1","localhost","[RAID5 Disk Volume: Drive 1 2 3 4] RAID device in degraded mode."

77,"Information","2015-07-07","04:35:03","System","127.0.0.1","localhost","[RAID5 Disk Volume: Drive 1 2 3 4] Drive 2 removed."

76,"Error","2015-07-07","04:34:52","System","127.0.0.1","localhost","Drive 2 plugged out."

Since July 7 our RAID 5 volume had been in degraded mode but we hadn’t known it. I will talk about this later in more detail. And on July 29 the disaster kicked in:

80,"Warning","2015-07-29","15:10:35","System","127.0.0.1","localhost","[Harddisk 3] medium error. Please run bad block scan on this drive or replace the drive if the error persists."

79,"Warning","2015-07-29","15:10:06","System","127.0.0.1","localhost","[Harddisk 3] I/O error, sense_key=0x3, asc=0x0, ascq=0x0, CDB=28 00 45 f5 b1 08 00 00 80 00 ."

In addition to the degraded mode we had I/O errors on the 3rd drive. Boom! The RAID 5 volume was gone. And our most recent backup was several months old!

The recovery

The RAID 5 volume was not available anymore, and the most recent backup (onsite and cloud) was several months old. How do we get back the last 6 months of data? The QNAP itself is based on Linux. I know next to nothing about Linux, so I was immediately out of luck and knowledge. Therefore I opened a support case at QNAP. In the meantime I had already ordered a few additional 2 TB disks, so that I could replace the faulty disks.

After a day I got a response from QNAP, and a support engineer logged into our QNAP through a TeamViewer session. He checked the status of the various drives through an SSH session. The outcome was that the 3rd drive was completely dead, and it seemed that the 2nd drive had “some” corrupt sectors. In the first step we replaced the 3rd drive with one of the spare drives, which had already arrived in the meantime (this was an express shipment…). And finally the support engineer did some fancy magic through the SSH session and was able to bring the 2nd drive with the corrupt sectors online as well.

And there it was: our RAID 5 array was back in degraded mode, and started the resynchronization! The synchronization took around 24 hours, and afterwards I immediately backed up all the data that was missing in our 2 other backups. In total we lost a few files and changes to files because of the corrupt sectors on the 2nd drive. But we were able to recover almost all of our data!

Lessons learned

There are so many lessons learned from this storage crash, which can also be applied to SQL Server installations. Let’s talk about them in more detail.

- *FORGET* about RAID 5!!! As soon as *one* disk dies, you are in degraded mode, and one additional disk failure brings your RAID 5 volume down! I would never ever have expected that 2 drives would fail within a few weeks. Our new storage configuration is now based on a RAID 10 volume (with 4x 4 TB drives), which gives us 8 TB of storage space, and 2 drives can fail without any problems – as long as they are not from the same mirror pair!

- *NEVER EVER* buy all your disks from one single shop! As you have seen from this story, 2 drives crashed within 3 weeks! When one disk crashes, there is also a very good chance that the other disks (from the same shipment) have problems as well. Therefore I have now bought the 4 individual drives from *4* different shops – maybe I’m a little bit paranoid now, but I don’t want to take this risk again.

- Make sure that you have spare disks available! It was almost a “Mission Impossible” to get new 2 TB disks within a few hours, so that I could replace the faulty drives. Therefore you should always make sure that you have some spare disks immediately available. Then you are able to replace crashed disks ASAP without waiting and praying to god…

- You need up-to-date backups! Sounds logical, but almost nobody is doing that at home. And a RAID 5 volume is not a backup! It provides you some basic high-availability, but if more than 1 disk crashes, you have a huge problem. You also have to think about disaster recovery – how do you get back your data in the case of a failure? Therefore it is very, very important that you have up-to-date backups. Even offsite, when your complete house burns down… I know so many SQL Server databases across the world, where the most recent backup is also several months old… Or databases without any backups…

- You need alerts! The QNAP storage told us that we were in the degraded mode, but we didn’t listen. Therefore it is also very important that you configure your alerts accordingly. And the same applies to your SQL Server installation: it doesn’t help you at all if you regularly run database consistency checks but nobody takes any notice of the output of those jobs. You have to configure your alerts accordingly so that SQL Server tells you actively when there are problems like I/O errors, etc.
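As an illustration of the alerting point, here is a minimal Python sketch that scans log entries in the format shown earlier and picks out everything that deserves a notification. It assumes the log is available as plain CSV text with straight quotes and eight columns per line; the function name `find_alerts` and the severity filter are my own choices for this example, and how you actually deliver the notification (e-mail, SMS, …) is left out. Most storage systems can send such notifications on their own once you configure them, so treat this only as a sketch of the idea.

```python
import csv
import io

# Sample lines in the format shown earlier (assumed to be a plain CSV export).
SAMPLE_LOG = '''\
78,"Warning","2015-07-07","04:35:03","System","127.0.0.1","localhost","[RAID5 Disk Volume: Drive 1 2 3 4] RAID device in degraded mode."
77,"Information","2015-07-07","04:35:03","System","127.0.0.1","localhost","[RAID5 Disk Volume: Drive 1 2 3 4] Drive 2 removed."
76,"Error","2015-07-07","04:34:52","System","127.0.0.1","localhost","Drive 2 plugged out."
'''

def find_alerts(log_text):
    """Return (date, time, severity, message) for every warning or error."""
    alerts = []
    for row in csv.reader(io.StringIO(log_text)):
        if len(row) < 8:
            continue  # skip blank or malformed lines
        severity, date, time, message = row[1], row[2], row[3], row[7]
        if severity in ("Warning", "Error"):
            alerts.append((date, time, severity, message))
    return alerts

for alert in find_alerts(SAMPLE_LOG):
    print(*alert)
```

Run against the three sample lines, this reports the degraded-mode warning and the “plugged out” error and skips the purely informational entry.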

Summary

We had a huge amount of luck, and our data loss was quite small. In conclusion we have now reconfigured the QNAP storage to a RAID 10 volume and I use disks from different shops just to be sure (as far as I can) that the disks do not crash at the same point in time again. And please make backups regularly (which you also test with a restore!), and configure your alerts accordingly!

Thanks for your time,

-Klaus