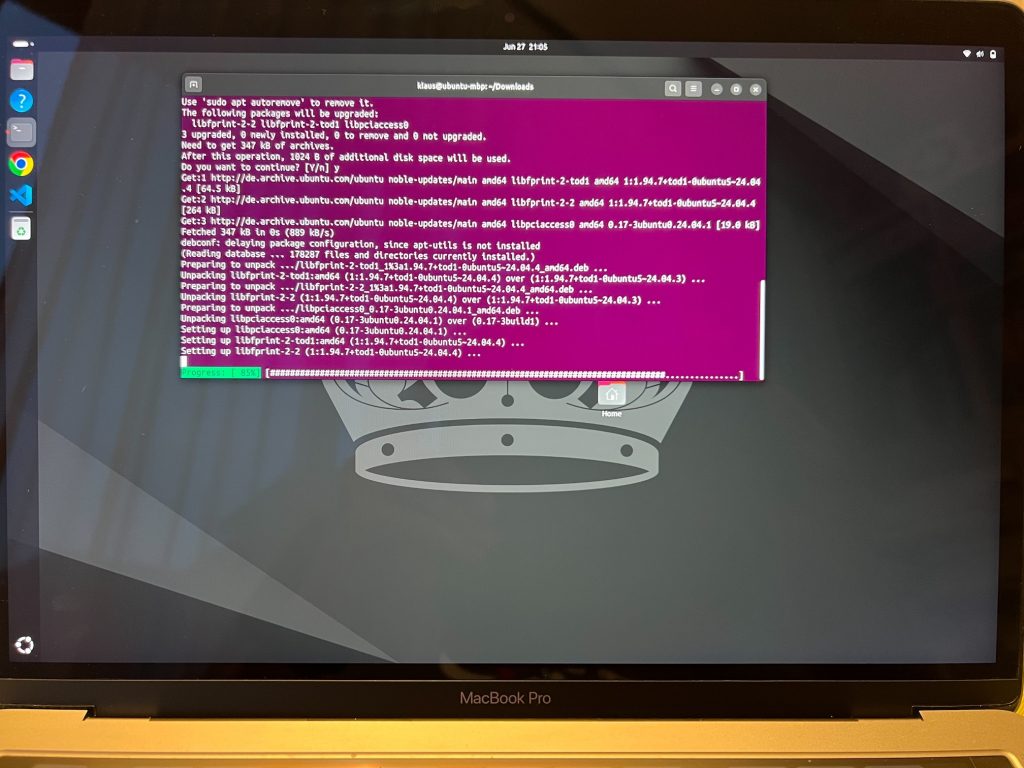

Switching My 2019 MacBook Pro to Linux: The T2-Ubuntu Journey

After years of using macOS, I recently decided to install Linux on my old, unused 13-inch MacBook Pro from 2019 – the one with the infamous Touch Bar and Apple’s T2 security chip. It wasn’t just an experiment. I wanted a fully working x64-based Linux desktop without buying any new hardware, and therefore I …
