This week and next I want to talk about a very important performance tuning topic in SQL Server: recompilations. As you will see, they can happen even when you execute very simple stored procedures. Today I want to lay the foundation: what recompilations are and why they have to happen. Next week I will show you how you can avoid them by rewriting your stored procedures in a different way.

Recompilations – what?

Before I talk about the specifics of recompilations in SQL Server, I want you to have a closer look at the following simple stored procedure.

CREATE PROCEDURE DemonstrateTempTableRecompiles
AS
BEGIN
    CREATE TABLE #TempTable
    (
        ID INT IDENTITY(1, 1) PRIMARY KEY,
        FirstName CHAR(4000),
        LastName CHAR(4000)
    )
    INSERT INTO #TempTable (FirstName, LastName)
    SELECT TOP 1000 name, name FROM master.dbo.syscolumns
    SELECT * FROM #TempTable
    DROP TABLE #TempTable
END
GO

As you can see from the code, there is nothing special about it. In the first step a temp table is created. Afterwards some records are inserted into the temp table, and finally the inserted data is retrieved with a simple SELECT statement. It is code that you have already written hundreds, maybe thousands, of times in SQL Server. As a first step, let’s create an Extended Events session that tracks recompilations. The following code shows the definition of this event session.

CREATE EVENT SESSION [TrackRecompiles] ON SERVER
ADD EVENT sqlserver.sql_statement_recompile
(
    ACTION
    (
        sqlserver.plan_handle,
        sqlserver.sql_text
    )
)
ADD TARGET package0.event_file(SET filename = N'c:\temp\TrackRecompiles.xel')
WITH
(
    MAX_MEMORY = 4096 KB,
    EVENT_RETENTION_MODE = ALLOW_SINGLE_EVENT_LOSS,
    MAX_DISPATCH_LATENCY = 30 SECONDS,
    MAX_EVENT_SIZE = 0 KB,
    MEMORY_PARTITION_MODE = NONE,
    TRACK_CAUSALITY = OFF,
    STARTUP_STATE = OFF
)
GO
-- Start the Event Session
ALTER EVENT SESSION TrackRecompiles
ON SERVER
STATE = START
GO

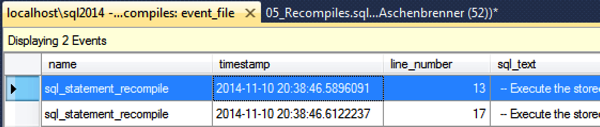

When you now execute the stored procedure, you will see that 2 recompilations have happened at runtime.
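If you prefer a query over the live data viewer, the captured events can also be read back from the file target. The following is a minimal sketch, assuming the session and file path defined above and that the login may read the target file. The field names `recompile_cause` and `statement` are what I expect the `sql_statement_recompile` event to expose; if your version names them differently, the columns simply come back as NULL.

```sql
-- Run the procedure from this post once.
EXEC DemonstrateTempTableRecompiles;
GO

-- Stopping the session flushes buffered events to the file target
-- (the session was created with MAX_DISPATCH_LATENCY = 30 SECONDS).
ALTER EVENT SESSION TrackRecompiles ON SERVER STATE = STOP;
GO

-- Read the events back. The wildcard matches the rollover suffix
-- that SQL Server appends to the configured file name.
SELECT
    x.event_xml.value('(event/@timestamp)[1]', 'datetime2') AS event_time_utc,
    x.event_xml.value('(event/data[@name="recompile_cause"]/text)[1]', 'nvarchar(256)') AS recompile_cause,
    x.event_xml.value('(event/data[@name="statement"]/value)[1]', 'nvarchar(max)') AS statement_text
FROM sys.fn_xe_file_target_read_file(N'c:\temp\TrackRecompiles*.xel', NULL, NULL, NULL) AS f
CROSS APPLY (SELECT CAST(f.event_data AS XML) AS event_xml) AS x;
GO
```

The `recompile_cause` column tells you the reason SQL Server recorded for each recompilation, which is handy for checking the explanation that follows against your own instance. Start the session again with `STATE = START` if you want to capture further executions.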

A recompilation means that SQL Server recompiled the execution plan during the execution itself. This introduces additional CPU overhead that will ultimately decrease the throughput of your workload. But for now the real question is: why did these recompilations happen?
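Recompilations also leave a trace in the plan cache. As a rough sketch (it assumes you run it in the database that contains the procedure and that you have the VIEW SERVER STATE permission), the `plan_generation_num` column of `sys.dm_exec_query_stats` shows how often each statement's plan has been regenerated:

```sql
-- One row per cached statement of the procedure.
-- plan_generation_num increases each time the statement's plan is recompiled.
SELECT
    qs.plan_generation_num,
    qs.execution_count,
    qs.creation_time,
    SUBSTRING(st.text, (qs.statement_start_offset / 2) + 1,
        ((CASE qs.statement_end_offset
              WHEN -1 THEN DATALENGTH(st.text)
              ELSE qs.statement_end_offset
          END - qs.statement_start_offset) / 2) + 1) AS statement_text
FROM sys.dm_exec_query_stats AS qs
CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) AS st
WHERE st.dbid = DB_ID()
  AND st.objectid = OBJECT_ID(N'DemonstrateTempTableRecompiles')
ORDER BY qs.statement_start_offset;
```

Comparing `plan_generation_num` with `execution_count` across a few runs gives a quick impression of how often a statement is recompiled relative to how often it is executed.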

SQL Server mainly performs recompilations under the following 2 circumstances:

- Schema Changes

- Statistics Updates

These 2 circumstances are exactly what triggered the recompilations in the previously executed stored procedure. When you look again at the stored procedure definition, you can see that the first step creates a temp table. When you create a temp table in TempDb, you change your database schema, and that is why the first recompilation happened.

After the creation of the temp table, you inserted some rows into it. Therefore SQL Server had to update the underlying statistics object of the Clustered Index of the temp table (SQL Server created the Clustered Index through the PRIMARY KEY constraint), and this caused the second recompilation. A simple stored procedure caused 2 recompilations in SQL Server. Wow!
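If you want to look at that statistics object yourself, the following sketch repeats the steps of the procedure in an ad hoc batch and then queries the statistics metadata. It is illustrative only: `#StatsDemo` is a hypothetical table name, the batch has to run in the context of TempDb because `STATS_DATE` and `sys.dm_db_stats_properties` resolve object IDs in the current database, and `sys.dm_db_stats_properties` is only available on newer builds of SQL Server. What you see for the last update time may vary by version and by the plan the Query Optimizer chooses.

```sql
USE tempdb;
GO

-- Same shape as the temp table in the stored procedure.
CREATE TABLE #StatsDemo
(
    ID INT IDENTITY(1, 1) PRIMARY KEY,
    FirstName CHAR(4000),
    LastName CHAR(4000)
);

INSERT INTO #StatsDemo (FirstName, LastName)
SELECT TOP 1000 name, name FROM master.dbo.syscolumns;

SELECT * FROM #StatsDemo;

-- Statistics objects that exist on the temp table, with their
-- last update time and the row counts SQL Server has recorded.
SELECT
    s.name AS stats_name,
    s.auto_created,
    STATS_DATE(s.object_id, s.stats_id) AS last_updated,
    sp.rows,
    sp.modification_counter
FROM sys.stats AS s
OUTER APPLY sys.dm_db_stats_properties(s.object_id, s.stats_id) AS sp
WHERE s.object_id = OBJECT_ID(N'tempdb..#StatsDemo');

DROP TABLE #StatsDemo;
GO
```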

Summary

Temp tables can have a huge performance overhead caused by recompilations. On the other hand, temp tables have accurate statistics that help the Query Optimizer produce better-performing execution plans. Therefore temp tables only make sense when you deal with a larger set of data. For a smaller set of data, the CPU overhead caused by the recompilations would be larger than the performance gain from the statistics.

In next week's blog post I will introduce Table Variables, and we will see how they can avoid the nasty side effects of recompilations, and what other performance problems they can introduce. Stay tuned.

Thanks for your time,

-Klaus