As you might know from my Home Lab setup, I’m a big believer in VMware vSAN, VMware’s Software-Defined Storage solution. In today’s blog posting I therefore want to give you a step-by-step guide on how to create a vSAN-based vSphere Cluster. For a basic understanding of the inner workings of vSAN, please refer to one of my previous blog postings.

ESXi Hosts and Cluster Overview

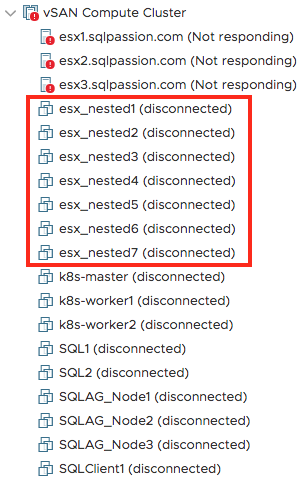

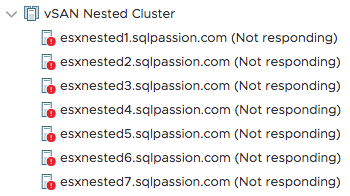

Because I wanted a vSAN Cluster for learning VMware NSX-T and PKS, I decided to create an additional nested vSAN Cluster consisting of 7 nested ESXi hosts. In this isolated environment I can test everything without any side effects on my physical vSphere deployment:

- esxnested1.sqlpassion.com: 192.168.1.171

- esxnested2.sqlpassion.com: 192.168.1.172

- esxnested3.sqlpassion.com: 192.168.1.173

- esxnested4.sqlpassion.com: 192.168.1.174

- esxnested5.sqlpassion.com: 192.168.1.175

- esxnested6.sqlpassion.com: 192.168.1.176

- esxnested7.sqlpassion.com: 192.168.1.177

On each nested host I installed ESXi 6.7 Update 1, the latest release of the VMware hypervisor at the time of writing. One of the most important things to remember here is that nested vSAN Datastores currently don’t really work: you can’t store the VMDK files of the nested ESXi hosts on a vSAN Datastore. I tried that approach in the beginning, but afterwards I was not able to create the nested vSAN Datastore successfully.

David Chung told me that the root cause of this problem was the nesting of the vSAN Datastores. Therefore I bought a Samsung 970 PRO 1 TB NVMe SSD (Amazon Prime FTW!), installed it (through a PCIe adapter I had bought earlier) in one of my HP Servers, and created a traditional VMFS Datastore on top of it.

This (superfast) datastore now stores the VMDK files of the 7 nested ESXi hosts. Maybe I will buy 2 additional NVMe SSDs for my 2 remaining HP Servers, so that I can also demonstrate superfast Storage vMotion operations between the disks…

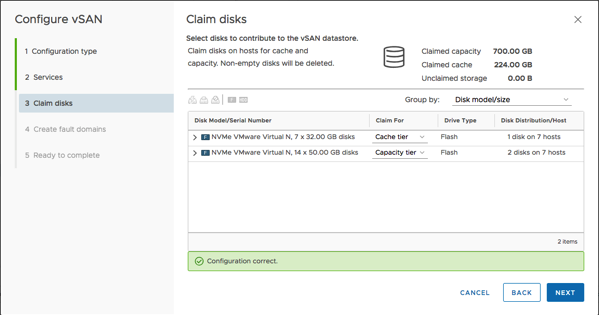

Each nested ESXi host uses the following 4 VMDK files, all attached to an NVMe controller:

- 1x 4 GB: Boot Drive for ESXi

- 1x 32 GB: Caching Device for the vSAN Datastore

- 2x 50 GB: Capacity Devices for the vSAN Datastore
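With this disk layout, a quick back-of-the-envelope calculation shows how big the resulting vSAN Datastore gets. Only the capacity devices count towards the datastore size; the cache devices don’t. The sketch below assumes the default vSAN storage policy (FTT=1 with RAID-1 mirroring) and ignores on-disk format overhead and recommended free (slack) space, so treat the numbers as rough upper bounds. The function names are just illustrative.

```shell
# Rough vSAN capacity estimate for the nested cluster described above.
# Only capacity-tier devices count; the 32 GB cache devices add no capacity.
HOSTS=7                # nested ESXi hosts
CAPACITY_DISKS=2       # capacity devices per host
CAPACITY_DISK_GB=50    # size of each capacity device in GB

# Raw capacity = hosts * capacity disks per host * disk size
raw_capacity_gb() {
  echo $(( $1 * $2 * $3 ))
}

# ASSUMPTION: default policy FTT=1 with RAID-1 mirroring, so every object
# is stored twice and roughly half of the raw capacity holds VM data.
# On-disk format overhead and slack space are ignored here.
usable_ftt1_mirror_gb() {
  echo $(( $1 / 2 ))
}

RAW=$(raw_capacity_gb "$HOSTS" "$CAPACITY_DISKS" "$CAPACITY_DISK_GB")
echo "Raw capacity: ${RAW} GB"
echo "Usable (FTT=1, RAID-1): $(usable_ftt1_mirror_gb "$RAW") GB"
```

For the 7 hosts this prints 700 GB of raw capacity and about 350 GB usable for VM data under the default policy.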

After installing the individual ESXi hosts, I configured the Management Network on each of them and made sure that every host is able to ping every other host. Just to be on the safe side…
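That ping check can be scripted instead of typed by hand. This is a minimal sketch you could paste into the ESXi Shell of each host; it assumes the 7 management IPs listed above and that `ping -c` is available in the shell. The function names are hypothetical and not part of ESXi.

```shell
# Print the management IPs of the 7 nested hosts (192.168.1.171 - .177).
mgmt_ips() {
  for i in 1 2 3 4 5 6 7; do
    echo "192.168.1.17$i"
  done
}

# Ping every management IP twice and report the result per address.
# Returns non-zero if at least one host did not answer.
check_mgmt_network() {
  failed=0
  for ip in $(mgmt_ips); do
    if ping -c 2 "$ip" > /dev/null 2>&1; then
      echo "OK   $ip"
    else
      echo "FAIL $ip"
      failed=1
    fi
  done
  return "$failed"
}
```

Run `check_mgmt_network` on each host; every line should report `OK`.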

Finally I created a simple Cluster in my vCenter and added the 7 ESXi hosts to it (the screenshot was taken while the nested ESXi hosts were powered down):

Distributed Switch Setup

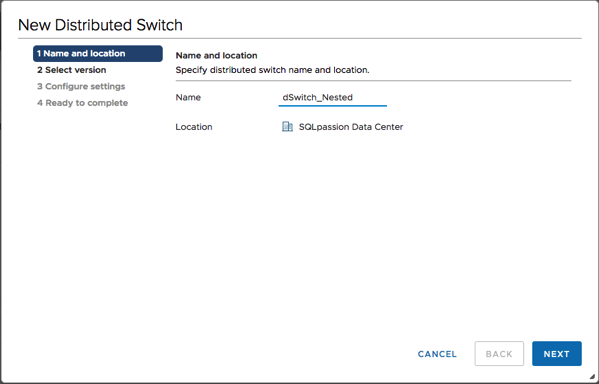

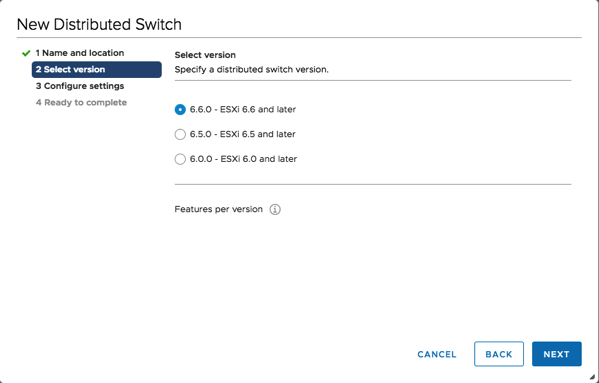

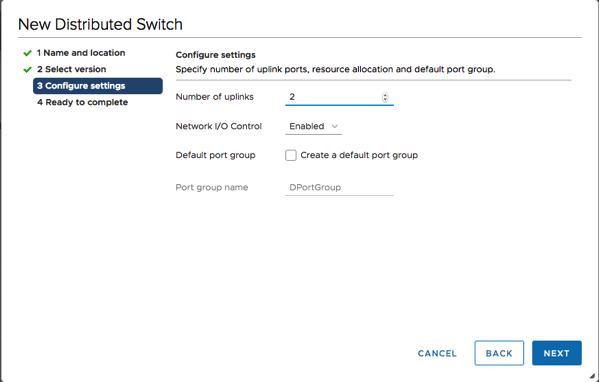

Before you actually create a vSAN Datastore, you need to configure the networking for vSAN. I used a Distributed Switch with a dedicated Port Group for vSAN (a Standard Switch would also work). The great thing about vSAN is that it also includes a license for the Distributed Switch. The following pictures show the basic configuration of the Distributed Switch.

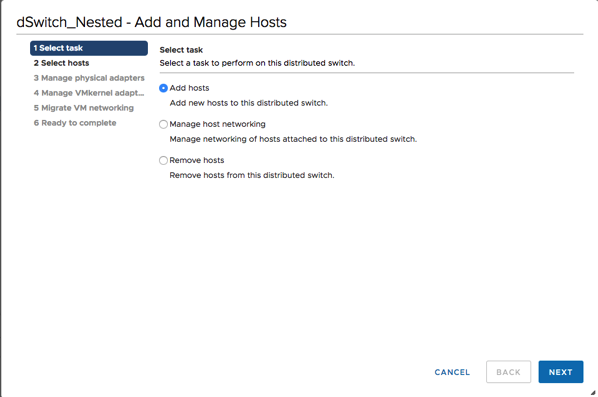

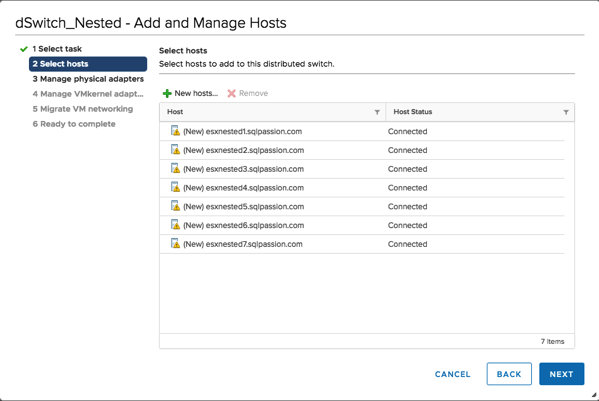

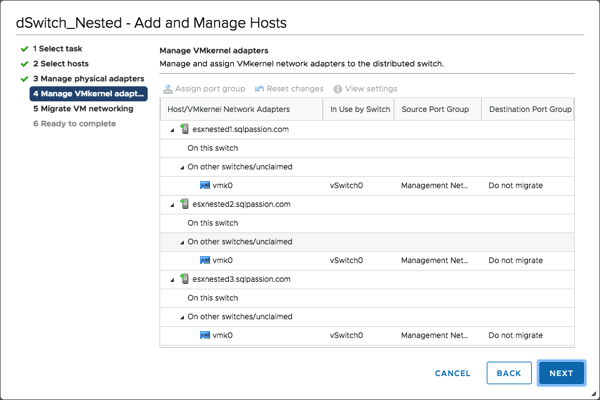

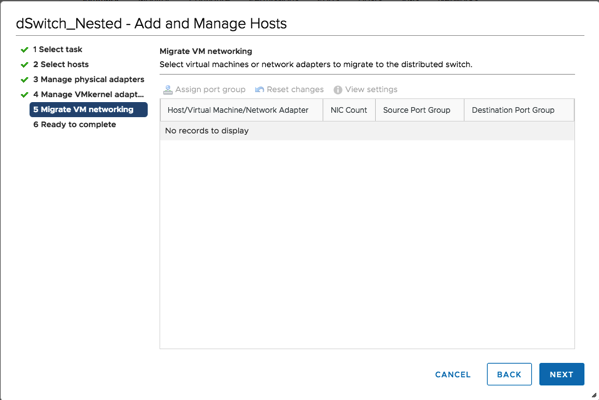

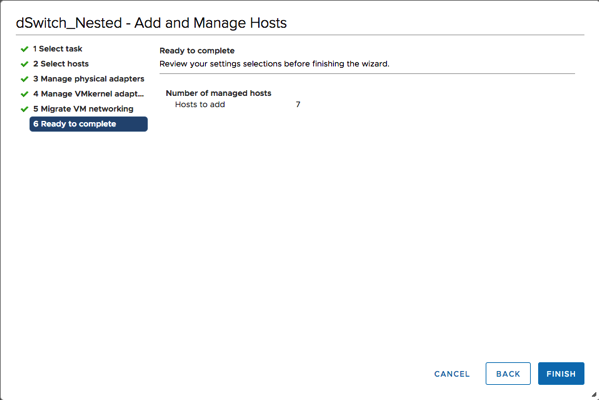

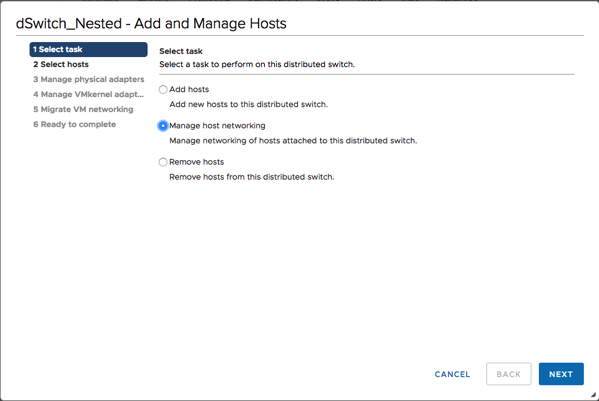

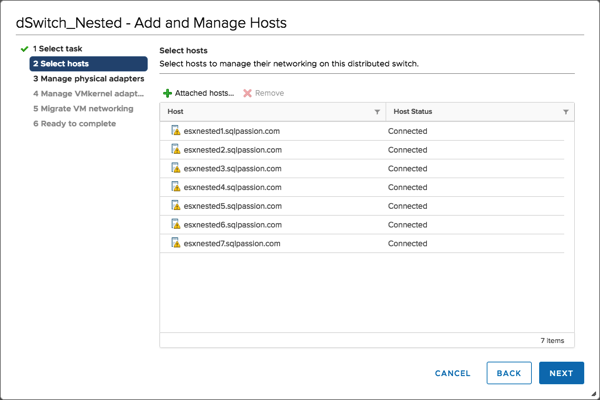

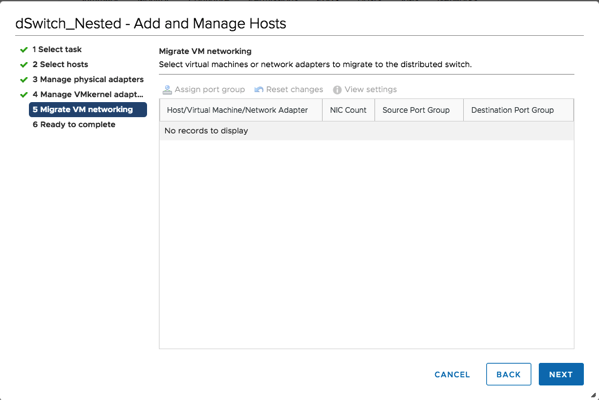

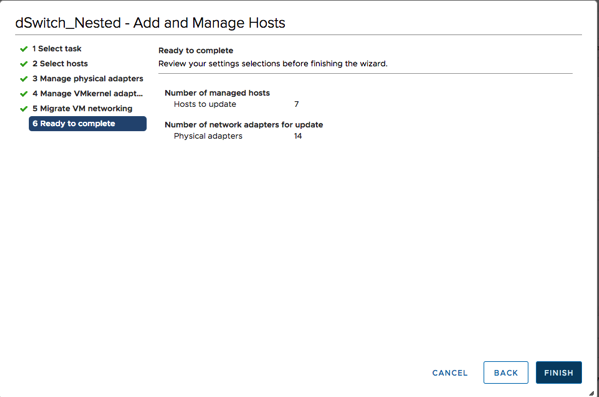

After creating the Distributed Switch, I added the 7 nested ESXi hosts to it, as you can see in the following pictures.

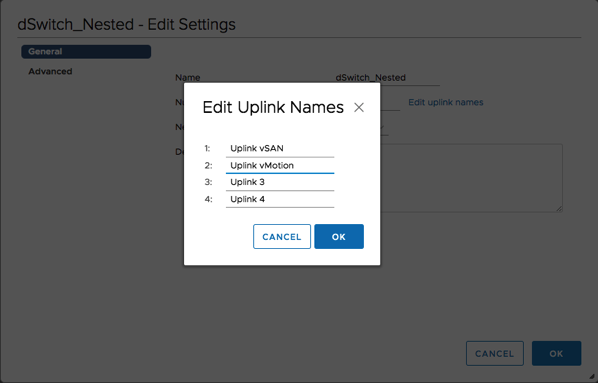

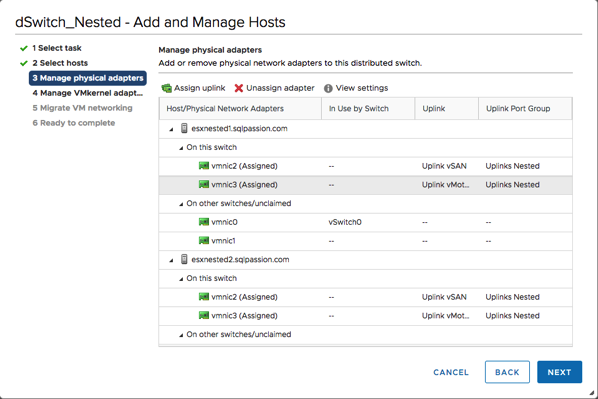

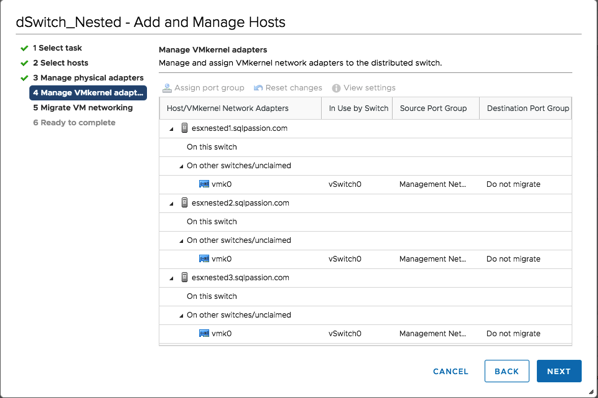

As you can also see, I left the Management Network on a Standard Switch. After adding the 7 ESXi hosts to the Distributed Switch, I also renamed the Uplinks accordingly:

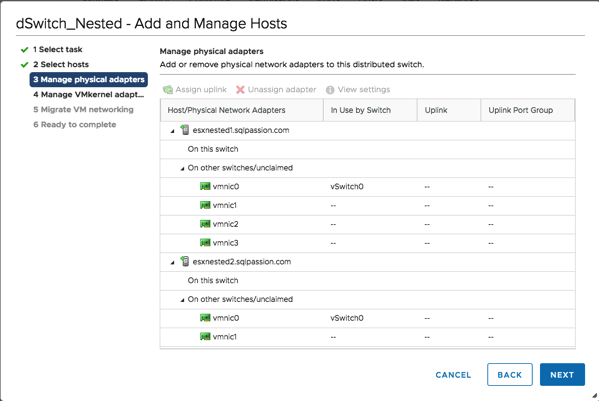

As a next step, I assigned the Uplinks to the NICs of the ESXi hosts:

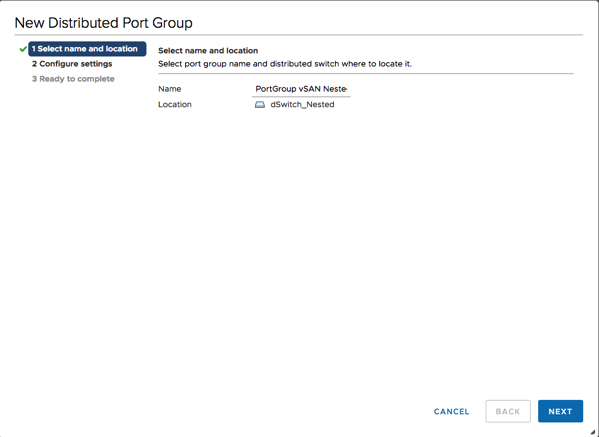

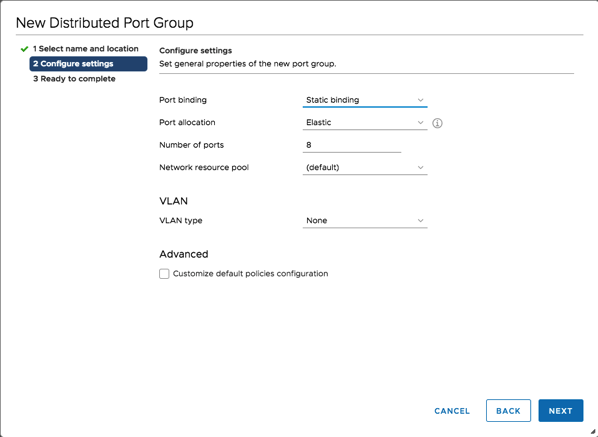

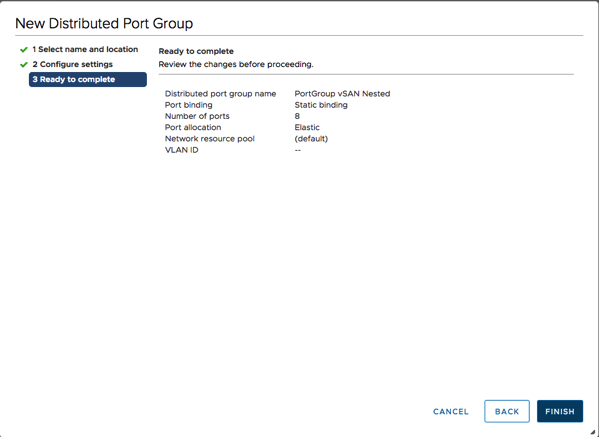

In preparation for the vSAN setup, I then created a new Distributed Port Group dedicated to vSAN, as you can see in the following pictures.

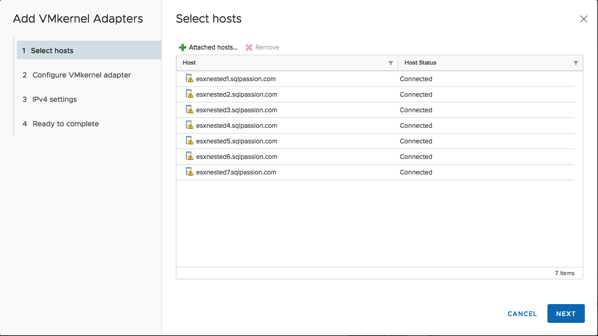

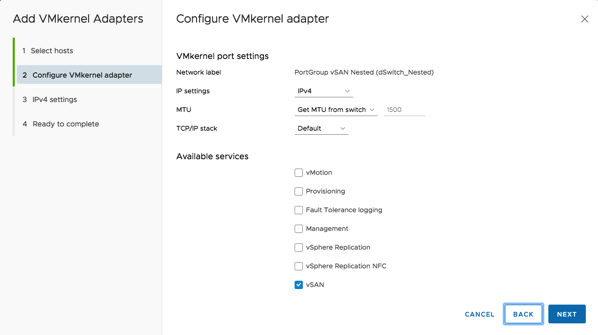

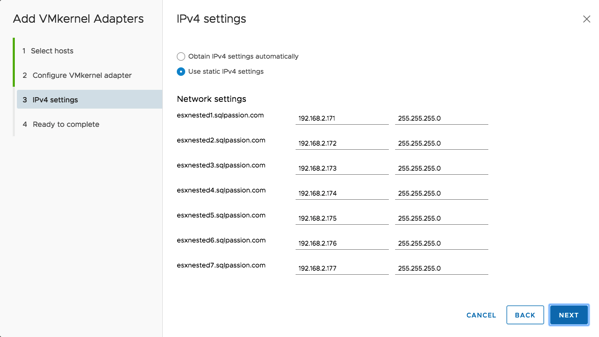

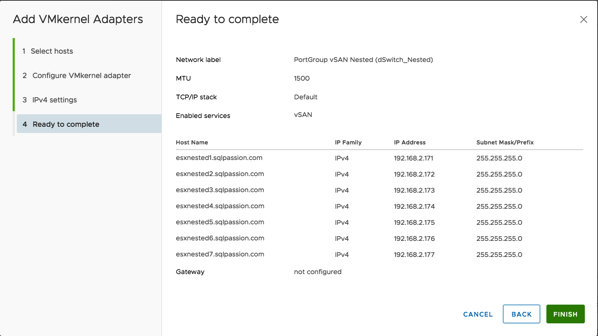

Finally, I added a VMkernel Adapter for vSAN traffic to this Distributed Port Group, using IP addresses from the 192.168.2.0/24 subnet.

After the VMkernel Adapter was added to the Distributed Port Group, I ran a vmkping command on each ESXi host (through an SSH shell) to check that its vSAN VMkernel Adapter can communicate with the other hosts:

```shell
vmkping -I vmk1 192.168.2.172
```
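To repeat this test against all hosts instead of a single address, a small loop helps. This is a minimal sketch under two assumptions: the vSAN VMkernel adapters use 192.168.2.171 to 192.168.2.177 (mirroring the last octet of the management IPs), and `vmk1` is the vSAN adapter on every host. The helper names are hypothetical.

```shell
# vSAN VMkernel adapter, as in the vmkping example above.
VSAN_VMK="vmk1"

# ASSUMPTION: vSAN IPs mirror the management IPs' last octet
# (192.168.2.171 - .177). Adjust to your actual addressing.
vsan_ips() {
  for i in 1 2 3 4 5 6 7; do
    echo "192.168.2.17$i"
  done
}

# Print every vSAN IP except the local one, passed as $1.
vsan_peers() {
  vsan_ips | grep -v -x -F "$1"
}

# Ping all peers through the vSAN VMkernel adapter (3 packets each).
# Returns non-zero if at least one peer is unreachable.
check_vsan_network() {
  failed=0
  for ip in $(vsan_peers "$1"); do
    if vmkping -I "$VSAN_VMK" -c 3 "$ip" > /dev/null 2>&1; then
      echo "OK   $ip"
    else
      echo "FAIL $ip"
      failed=1
    fi
  done
  return "$failed"
}
```

On esxnested1, for example, you would run `check_vsan_network 192.168.2.171` so that the host skips its own address.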

Once the network communication was verified, I moved on to configuring vSAN itself.

vSAN Setup

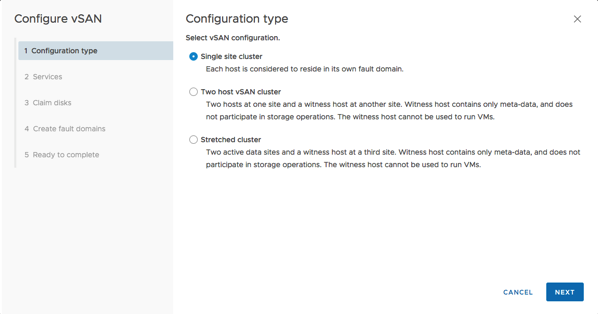

Let’s continue with the vSAN setup. To keep things simple, I created a Single Site Cluster, as you can see in the following picture.

Afterwards I was really amazed that vSAN automatically chose the correct disks on each ESXi host for the Cache and Capacity Devices. This is a huge improvement over previous versions:

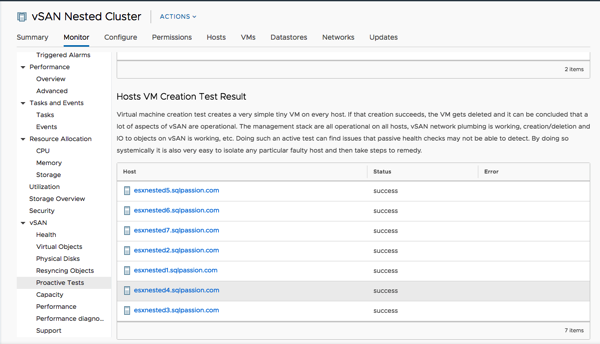
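If you prefer to double-check the automatic disk selection from the command line, `esxcli vsan storage list` shows the disks vSAN has claimed on a host. The small filter below counts cache and capacity devices. It assumes that the output contains one `Is Capacity Tier: true|false` line per claimed disk (as I would expect on ESXi 6.x), so please verify that against your own output first. The function name is hypothetical.

```shell
# Count cache and capacity devices in `esxcli vsan storage list` output.
# ASSUMPTION: each claimed disk has an "Is Capacity Tier: true|false" line.
summarise_vsan_disks() {
  awk '
    /Is Capacity Tier: true/  { capacity++ }
    /Is Capacity Tier: false/ { cache++ }
    END { printf "cache=%d capacity=%d\n", cache, capacity }
  '
}
```

Usage on a host: `esxcli vsan storage list | summarise_vsan_disks`. With the disk layout described earlier, each host should report `cache=1 capacity=2`.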

As soon as vSAN was configured, I verified the functionality of the vSAN Datastore with the built-in test.

Summary

As you have seen in this blog posting, creating a vSAN-powered Cluster is not really a big deal. Most of the work was configuring the Distributed Switch. If you are too lazy to perform all these steps manually, you can also use the Automatic vSphere Lab Deployment from William Lam, which creates a whole nested vSphere deployment automatically through PowerCLI.

Thanks for your time,

-Klaus