Today I want to talk more about Plan Caching and its side-effects in SQL Server. As you might know, every logical query submitted to SQL Server is compiled into a physical execution plan. That execution plan is afterwards cached in the so-called Plan Cache for further reuse. Let’s talk in the first step about adhoc SQL statements and which side-effects and performance problems they can introduce.

Adhoc SQL Statements

Every time when you submit an adhoc SQL statement to SQL Server, an execution plan gets compiled for every unique query. What do I mean by “unique query”? The answer is very simple: SQL Server creates a hash value across the complete SQL statement (incl. possible hard-coded parameter values), and uses this hash value as a lookup value into the plan cache. If an execution plan is found with this hash value, the plan is reused, otherwise a new plan is compiled and finally cached in the plan cache. Imagine you submit the following 3 queries to SQL Server:

SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID = 11000 GO SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID = 30052 GO SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID = 11223 GO

For these 3 queries SQL Server compiles 3 different execution plans, because you have provided a hard-coded parameter value. Therefore the calculated hash value differs between these 3 queries, and no cached plan is found. As a side-effect you now have 3 plans for the almost identical queries in the plan cache. This specific problem is called Plan Cache Pollution.

You are just polluting your plan cache with various execution plans, which are hard to reuse (because of the hard-coded parameter values) and you are wasting a lot of useful memory which could be used by other components within SQL Server. The goal of caching should be always a high reuse count, which isn’t the case with a lot of adhoc SQL statements.

Plan Stability

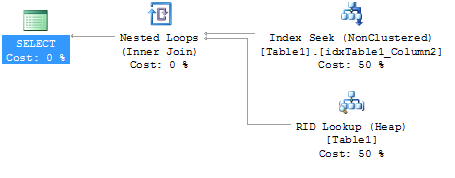

Imagine you are using parameter values for your SQL statements, or you are even using stored procedures. In that case, SQL Server can reuse cached execution plans very easily. But even with the reuse of a cached execution plan you can introduce performance problems. Imagine SQL Server compiles an execution plan for a query, which has to perform a Bookmark Lookup, because the used Non-Clustered Index isn’t covering your query:

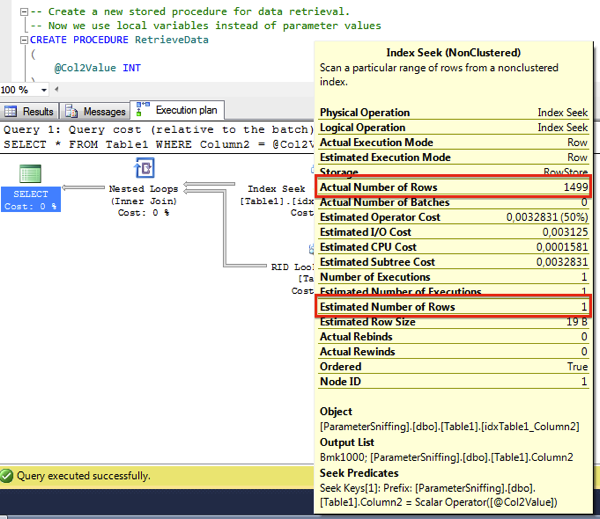

As we have already said in week 8, a Bookmark Lookup only makes sense if you are retrieving a few records from your table. If you are over the Tipping Point, it is more cost effective to do a complete Table- or Clustered Index Scan. But if SQL Server is reusing a cached execution plan, that decision isn’t taken into consideration anymore – SQL Server just blindly reuses your plan – even when your performance will be awefully slow! Look at the following actual execution plan:

Here SQL Server has blindly reused a cached plan with a Bookmark Lookup. As you can see the estimated and actual number of rows are quite different here! SQL Server has compiled and cached that plan based on the assumption that only one rows is returned from that query. But in reality we get 1499 rows back from SQL Server. You are just looking at a plan which was optimized under the assumption that only one row is returned – think about that.

The underlying root cause here is that you don’t have a Plan Stability. Based on the estimated number of rows, you get a cached plan with a Bookmark Lookup, or a Table/Clustered Index Scan if you are over the Tipping Point. That’s the most common performance problem that I see when I’m dealing with bad performing SQL Servers at customer sites.

How can you fix that specific problem? Easy: avoid the Bookmark Lookup through a Covering Non-Clustered Index. With that approach you have achieved plan stability, and regardless of the first provided input parameter you always get the same plan with the same performance. If you are interested in learning more about that problem, I suggest that you watch my one-hour video Parameter Sniffing through the SQLpassion Online Academy.

Summary

Today you have seen that plan caching is a double-edged sword in SQL Server: on one hand, plan caching is a powerful concept because you can reuse already compiled plans to avoid compilation costs. On the other hand it is very dangerous, because with certain shapes of execution plans, you don’t have plan stability, which means you can’t guarantee performance anymore

Thanks for your time,

-Klaus