In today's blog posting I want to talk about the memory optimized file group that In-Memory OLTP uses to achieve durability, and how to configure it for high-performance throughput. Before we go into the details, I want to give you a brief overview of how In-Memory OLTP achieves durability with this specialized file group in your database.

Durability for In-Memory OLTP

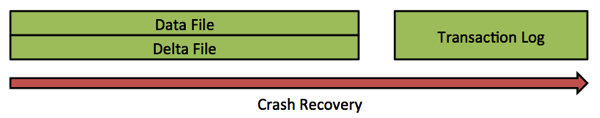

One of the big misconceptions in the early days of In-Memory OLTP (codenamed Hekaton) was that In-Memory OLTP doesn't provide ACID transactions, only ACI ones without the aspect of Durability. But this isn't true, because every operation done in memory is fully logged by In-Memory OLTP. If your database crashes, In-Memory OLTP is able to bring your database and your memory optimized tables back to the state before the crash occurred. In-Memory OLTP logs every operation to the traditional transaction log of SQL Server. Because everything happens in non-durable memory based on the MVCC principles, In-Memory OLTP only logs redo log records and never any undo log records: uncommitted changes are never written to disk, so an undo phase never happens during crash recovery with In-Memory OLTP. And to roll back an in-flight transaction, the previous version of each modified row is always available in memory.

Redo log records are also only written when a transaction commits. In addition, the so-called Offline Checkpoint Worker copies successfully committed transactions from the transaction log into pairs of so-called Data and Delta Files. As with your regular SQL Server data files, the data and delta files of In-Memory OLTP are there to speed up crash recovery. Crash recovery first constructs your memory optimized tables from the data and delta file pairs, and afterwards applies all changes that occurred since the last checkpoint from the transaction log. Let's have a look at this concept.
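If you want to see these data and delta file pairs in your own database, you can query the DMV sys.dm_db_xtp_checkpoint_files. The following is a minimal sketch, assuming the InMemoryOLTP database from the listings in this posting already has a memory optimized file group. The set of columns returned by this DMV differs between SQL Server versions, so only a few basic columns are selected here.

```sql
USE InMemoryOLTP
GO

-- Force a manual checkpoint so that recently committed transactions
-- are persisted into the checkpoint files
CHECKPOINT
GO

-- List the checkpoint files (data and delta files) and the storage
-- container each one lives in
SELECT
    container_id,
    file_type_desc,       -- e.g. DATA or DELTA
    state_desc,
    file_size_in_bytes,
    relative_file_path
FROM sys.dm_db_xtp_checkpoint_files
ORDER BY container_id, file_type_desc
GO
```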

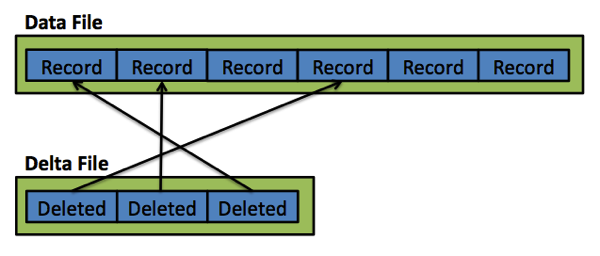

Because In-Memory OLTP is all about maximum performance, SQL Server writes the data and delta file pairs only with sequential I/O. There is no random I/O involved, because that would kill the performance. So what information is written to the data and delta file pairs? The data file of In-Memory OLTP contains the records that were inserted into your memory optimized table. Because new records are only appended at the end of the file, sequential I/O can be achieved very easily. When you delete a specific record in your memory optimized table, that record is marked as logically deleted in the corresponding delta file. That information is also always appended at the end of the delta file, which again leads to truly sequential I/O on the storage. And an UPDATE statement is just a combination of a DELETE of the old copy of the record and an INSERT of the new one. Easy, isn't it? The following picture illustrates this very important concept.
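To make the mapping between DML statements and the data and delta files more concrete, here is a minimal sketch against a hypothetical durable memory optimized table dbo.Orders (the table and column names are assumptions for illustration only). It requires that the database already has a memory optimized file group. The comments describe where each operation eventually ends up once the checkpoint process has picked it up from the transaction log.

```sql
USE InMemoryOLTP
GO

-- Hypothetical durable memory optimized table: SCHEMA_AND_DATA means the
-- rows are persisted through the transaction log and the checkpoint files
CREATE TABLE dbo.Orders
(
    OrderID INT NOT NULL PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT = 1024),
    CustomerID INT NOT NULL,
    Amount DECIMAL(18, 2) NOT NULL
)
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA)
GO

-- INSERT: the new row is eventually appended to a data file
INSERT INTO dbo.Orders (OrderID, CustomerID, Amount) VALUES (1, 42, 100.00)

-- UPDATE: the old row version is marked as deleted in a delta file,
-- and the new row version is appended to a data file
UPDATE dbo.Orders SET Amount = 150.00 WHERE OrderID = 1

-- DELETE: the row is marked as logically deleted in a delta file
DELETE FROM dbo.Orders WHERE OrderID = 1
GO
```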

Tony Rogerson (Blog, Twitter) has also written a very detailed blog posting about how In-Memory OLTP achieves durability. I highly recommend it for a further deep dive into the topic!

Configuring the In-Memory OLTP file group

Crash recovery of memory optimized tables is performed at the speed of the storage where your data and delta file pairs are stored. For that reason, you should carefully plan the configuration of the In-Memory OLTP file group that stores these file pairs when you create your database. As a first step, you always have to add a new file group that contains memory optimized data, as you can see in the following listing:

```sql
-- Add a new memory optimized file group
ALTER DATABASE InMemoryOLTP
ADD FILEGROUP InMemoryOLTPFileGroup CONTAINS MEMORY_OPTIMIZED_DATA
GO
```

After you have created the memory optimized file group (which is under the covers a traditional FILESTREAM file group), you can add a storage container to it with the ADD FILE command, as demonstrated in the following listing:

```sql
-- Add a new storage container
ALTER DATABASE InMemoryOLTP
ADD FILE
(
    NAME = N'InMemoryOLTPContainer',
    FILENAME = N'C:\Program Files\Microsoft SQL Server\MSSQL12.SQL2014\MSSQL\DATA\InMemoryOLTPContainer'
)
TO FILEGROUP [InMemoryOLTPFileGroup]
GO
```

Almost all examples that you currently encounter in blog postings around the world add just 1 storage container, and that will hurt your performance tremendously! The reasons are very simple:

- Data and Delta files are stored on the same physical drive

- Writing to Data and Delta files on the same physical drive leads to random I/O

- Crash recovery can only be as fast as the one physical drive where the Data and Delta files are stored
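Before changing anything, you can check how many storage containers your memory optimized file group currently has, and where they are located. This is a minimal sketch, assuming the InMemoryOLTP database from the listings above. Memory optimized file groups show up in sys.filegroups with the type FX, and their containers are listed in sys.database_files.

```sql
USE InMemoryOLTP
GO

-- List all storage containers of the memory optimized file group(s)
SELECT
    fg.name           AS filegroup_name,
    df.file_id,
    df.name           AS container_name,
    df.physical_name  AS container_path,
    df.type_desc      -- FILESTREAM for memory optimized containers
FROM sys.filegroups AS fg
INNER JOIN sys.database_files AS df
    ON df.data_space_id = fg.data_space_id
WHERE fg.type = 'FX'  -- FX = MEMORY_OPTIMIZED_DATA_FILEGROUP
ORDER BY df.file_id
GO
```

If this query returns only a single row, all your data and delta files share one container, with the drawbacks listed above.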

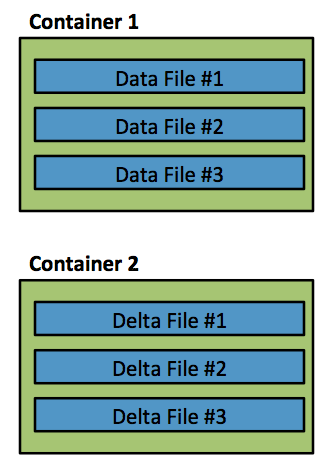

To overcome all these limitations, you can add multiple storage containers on different *physical* drives to the memory optimized file group. In that case, Data and Delta files are allocated in a round-robin fashion between the multiple storage containers. Imagine what happens when you configure 2 storage containers:

- The 1st data file goes into the 1st storage container

- The 1st delta file goes into the 2nd storage container

- The 2nd data file goes into the 1st storage container

- The 2nd delta file goes into the 2nd storage container

The following picture illustrates this concept in more detail.

But using only 2 storage containers doesn't solve your disk bottleneck problem, because with 2 containers all your data files are stored in the 1st container, and all your delta files are stored in the 2nd container. Normally your data files are quite a bit larger than your delta files, which means your I/O will not be balanced between the two storage containers on different physical drives. The 1st storage container, with all of your data files, will need to provide more IOPS than the 2nd one with all your delta files.
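The round-robin placement described above can be sketched with a small query. This is only a simplified model, assuming that allocation strictly alternates data file, delta file, data file, and so on across the containers in order, as described in this posting; it does not query SQL Server's actual allocation. @ContainerCount is a hypothetical variable that you can change.

```sql
-- Simplified model of round-robin allocation of data and delta files
DECLARE @ContainerCount INT = 2  -- number of storage containers (try 2, then 4)

SELECT
    PairIndex + 1                                AS FilePair,
    ((2 * PairIndex)     % @ContainerCount) + 1  AS DataFileContainer,
    ((2 * PairIndex + 1) % @ContainerCount) + 1  AS DeltaFileContainer
FROM (VALUES (0), (1), (2), (3), (4), (5)) AS Pairs (PairIndex)
ORDER BY FilePair
```

With @ContainerCount = 2, every data file lands in container 1 and every delta file in container 2. With @ContainerCount = 4, consecutive file pairs alternate between containers 1/2 and containers 3/4.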

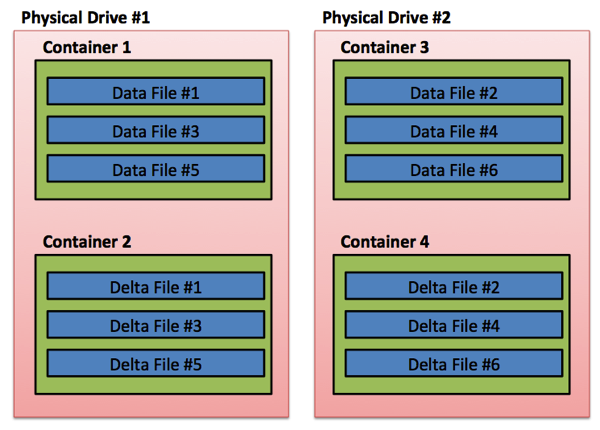

To distribute and balance the IOPS evenly across multiple physical drives, Microsoft recommends that you use at least 4 storage containers for your memory optimized file group. Imagine what happens when you use 4 storage containers spread across 2 physical drives, as illustrated in the following picture:

With that setup, you have the 1st file pair (data & delta files) on the 1st physical drive, the 2nd file pair (data & delta files) on the 2nd physical drive, the 3rd file pair on the 1st physical drive again, and so on. You have finally spread your I/O requirements evenly across multiple physical drives. This also speeds up crash recovery, because it can process the storage containers in parallel, which brings your database online faster.
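Here is a minimal sketch of such a configuration, meant to be used instead of the single-container listing above. The drive letters D:\ and E:\, the folder names, and the container names are hypothetical and stand for 2 separate physical drives. Containers 1 and 2 go to the 1st drive and containers 3 and 4 to the 2nd drive, so that consecutive file pairs alternate between the drives as described above (assuming the containers are used in the order in which they are added). The parent folders must already exist, while the container folders themselves must not exist yet, because SQL Server creates them.

```sql
-- Add 4 storage containers, spread across 2 physical drives
-- (all paths and names are hypothetical)
ALTER DATABASE InMemoryOLTP
ADD FILE
    (NAME = N'InMemoryOLTPContainer1', FILENAME = N'D:\InMemoryOLTP\Container1'),  -- 1st drive
    (NAME = N'InMemoryOLTPContainer2', FILENAME = N'D:\InMemoryOLTP\Container2'),  -- 1st drive
    (NAME = N'InMemoryOLTPContainer3', FILENAME = N'E:\InMemoryOLTP\Container3'),  -- 2nd drive
    (NAME = N'InMemoryOLTPContainer4', FILENAME = N'E:\InMemoryOLTP\Container4')   -- 2nd drive
TO FILEGROUP [InMemoryOLTPFileGroup]
GO
```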

Summary

As you have seen in this blog posting, storage speed and throughput are still very important for In-Memory OLTP. Your data must still be persisted physically to storage; otherwise it would be lost when your SQL Server crashes or is restarted. The configuration of your memory optimized file group has a huge impact on the throughput that the file group gives you. If you run In-Memory OLTP in a production environment, you should have at least 4 storage containers spread across 2 physical drives. In more performance-demanding scenarios you can even think about adding more storage containers on more than 2 physical drives. As Microsoft says: “Crash recovery in In-Memory OLTP is performed at the speed of your storage”.

Thanks for reading!

-Klaus

7 thoughts on “Configuring the In-Memory OLTP File Group for High Performance”

I asked Sunil at TechEd if any thought was put into providing options where you could specify data files on one drive and delta files on another. Nope, seems like I’m the only one who has thought of that, so he suggested to put it on Connect:

http://connect.microsoft.com/SQLServer/feedback/details/874058/allow-configuration-option-to-separate-data-and-delta-files

So, if I understood correctly, the design proposed in the article is not currently possible (as of SQL 2014 RTM)?

Hello Leo,

Thanks for your comment.

No, the proposed design is possible with SQL Server 2014 RTM.

You just have to configure multiple storage containers for the In-Memory OLTP file group.

Thanks

-Klaus

Klaus, continuing with LeoPasta's comment, can you provide an example (T-SQL) showing us how to properly configure the filegroup?

Thanks in advance.

Klaus,

On reviewing your blog entry, can you explain why it would not be more efficient to have containers 1 and 4 on disk 1 and containers 2 and 3 on disk 2? This approach would ensure the data and delta files of a pair would never be on the same disk (achieving fully sequential IO) and balance the data files across both disks. Granted, this may slow crash recovery, as the parallel process will lead to random IO, but for some, crash recovery time is less important than maximum throughput during normal operation. Am I missing something?

Regards,

Andrew

Hello Andrew,

Thanks for your comment.

During normal operations, almost everything in In-Memory OLTP happens in memory (besides the transaction logging).

Even the CHECKPOINT process is further optimized for it.

Therefore, with multiple containers, crash recovery of your In-Memory OLTP tables can run at the speed of your storage.

Thanks,

-Klaus

Hi,

Our database is located on flash storage.

1) In this case, is one container enough?

2) In particular, for in-memory table types: is one container enough, given that they have no on-disk component?

Regards,

Mark