If you want to get your hands dirty with SQL Server 2019 Big Data Clusters, the easiest way is to deploy one in Azure Kubernetes Service (AKS). But there are other options as well: you can deploy a single-node or multi-node SQL Server 2019 Big Data Cluster in locally hosted Virtual Machines within your own Data Center. Because I already have a larger VMware vSphere deployment available in my Home Lab, I tried to deploy a multi-node Big Data Cluster there.

In today’s blog posting I want to show you the necessary steps, along with a few lessons learned from my side.

Prerequisites

A SQL Server 2019 Big Data Cluster is more or less a product within a product. The functionality and features that you are getting after a successful deployment are *huge*, and there are so many different new concepts that you have to learn.

The whole deployment of a SQL Server 2019 Big Data Cluster happens within a Kubernetes Cluster. Kubernetes (or k8s) is an open source Container Orchestration framework that is currently getting a lot of attention. Therefore, the first prerequisite for deploying a SQL Server 2019 Big Data Cluster is an existing Kubernetes Cluster. Microsoft provides an article that describes how to deploy a 3-node Kubernetes Cluster in Ubuntu 16.04/18.04 Virtual Machines.

As that article already shows, the hardware prerequisites for these Virtual Machines are quite high:

- 8 vCPUs

- 64 GB RAM

- 100 GB Storage

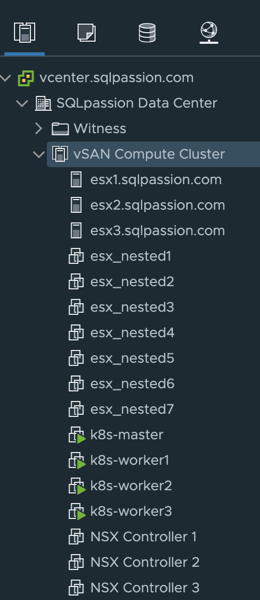

If you are deploying a Kubernetes Cluster for production use, you should also deploy multiple master nodes to make the cluster highly available. To keep things simple, I deployed 1 master node and 3 worker nodes in my case.

Once your Kubernetes Cluster is deployed, you have to deal with the next requirement: you need some kind of Persistent Storage for it. In Azure Kubernetes Service (AKS) this is quite easy, because AKS provides built-in storage classes with dynamic provisioning.

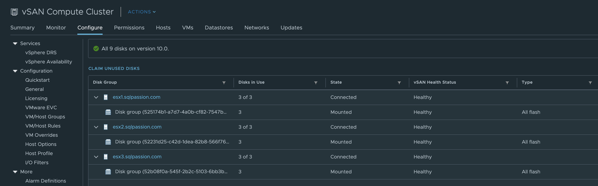

On a newly deployed Kubernetes Cluster of your own, however, there is no Persistent Storage available, because you have to plug in your own provider. But which provider do you use? If you have followed my blog over the years, you already know that I’m a huge fan of VMware vSAN, which is a hyperconverged Storage Solution built directly into the ESXi Hypervisor. And I have a production-ready vSAN implementation up and running in my Home Lab. Therefore, it would be great to use Persistent Storage that integrates with VMware vSAN.

Fortunately, VMware vSphere 6.7 Update 3 introduces the Kubernetes vSphere Cloud Provider, which provides so-called “Out-of-Tree Providers” that plug Persistent Storage into your Kubernetes Cluster via Kubernetes Pods. Unfortunately, my Home Lab was still on VMware vSphere 6.7 Update 1, so I had to upgrade everything to Update 3:

- vCenter Virtual Appliance

- All 3 ESXi Hosts

- vSAN Datastore

This took me some (unplanned) time, but in the end everything worked as expected, and my VMware vSphere Home Lab is now running on the latest version:

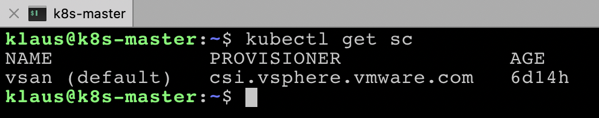

After the upgrade, I followed the installation guidelines for the Kubernetes vSphere Cloud Provider exactly step-by-step (this is very, very important!!!), and finally I was able to deploy a new Storage Class into my Kubernetes Cluster with the following YAML document:

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: vsan
      annotations:
        storageclass.kubernetes.io/is-default-class: "true"
    provisioner: csi.vsphere.vmware.com
    parameters:
      storagepolicyname: "vSAN Default Storage Policy"
      fstype: ext4

As you can see, the custom Storage Class uses the csi.vsphere.vmware.com Storage Provisioner that comes with the Kubernetes vSphere Cloud Provider. You can also tweak your storage requirements through the attribute storagepolicyname, and provide your own Storage Policy that you have configured in your vSphere Cluster.

To check if the dynamic provisioning functionality of Kubernetes vSphere Cloud Provider works, you can deploy the following Persistent Volume Claim (PVC) in your Kubernetes Cluster:

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: pvc1
      annotations:
        volume.beta.kubernetes.io/storage-class: vsan
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 2Gi

After the deployment of this PVC, Kubernetes should create a 2 GiB VMDK file in your vSAN Datastore. After you have completed these steps, you are ready to deploy your first SQL Server 2019 Big Data Cluster on VMware vSphere!
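Besides looking for the VMDK file in the Datastore, the PVC test can also be checked from a script. The following is only a minimal Python sketch under a few assumptions: `kubectl` is on your PATH and configured for the cluster, the claim was created in the `default` namespace, and a successfully provisioned claim reports the phase `Bound`. The helper names `pvc_is_bound` and `fetch_pvc` are hypothetical and exist only for this illustration.

```python
import json
import subprocess


def pvc_is_bound(pvc):
    """Return True if a PersistentVolumeClaim object reports the phase 'Bound'."""
    return pvc.get("status", {}).get("phase") == "Bound"


def fetch_pvc(name, namespace="default"):
    """Fetch one PVC as a dict by shelling out to kubectl (assumed to be on PATH)."""
    result = subprocess.run(
        ["kubectl", "get", "pvc", name, "-n", namespace, "-o", "json"],
        check=True,           # raise CalledProcessError if kubectl fails
        capture_output=True,  # requires Python 3.7 or later
        text=True,
    )
    return json.loads(result.stdout)


# Example against a live cluster (not executed here):
# print(pvc_is_bound(fetch_pvc("pvc1")))
```

A claim that stays in the phase `Pending` usually means that the provisioner could not create the volume, so that is the first thing to look at before you continue.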

The Deployment

Because you have deployed a custom Storage Class in your Kubernetes Cluster, you also have to customize the storage configuration of your Big Data Cluster. The deployment of a Big Data Cluster is described in JSON configuration files. The following built-in configurations are currently available, and the documentation describes them in more detail:

- aks-dev-test

- aks-dev-test-ha

- kubeadm-dev-test

- kubeadm-prod

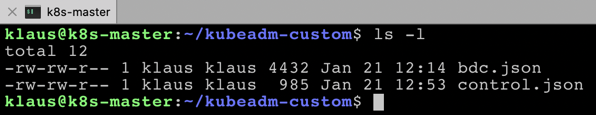

In my case, I decided to deploy the kubeadm-dev-test configuration, so the storage settings of this configuration have to be customized. In the first step you have to create a copy of the deployment profile with the following command line:

azdata bdc config init --source kubeadm-dev-test --target kubeadm-custom

This command exports the JSON configuration files (bdc.json and control.json) into the folder kubeadm-custom.

You have to change the control.json file to set the Storage Class to the deployed Storage Class in your Kubernetes Cluster:
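The edit itself can be made in any text editor. As an illustration only, here is a minimal Python sketch that sets the Storage Class for both the data and the log volumes. It assumes that control.json keeps these settings under `spec.storage.data.className` and `spec.storage.logs.className`; that layout is an assumption about the exported profile, and the helper name `set_storage_class` is hypothetical, so please verify the keys against your own file first.

```python
import json
from pathlib import Path


def set_storage_class(control_path, class_name):
    """Set the storage class for the data and logs volumes in a control.json file.

    Assumes the layout spec.storage.{data,logs}.className. A KeyError is
    raised if the file is laid out differently, and nothing is written then.
    """
    path = Path(control_path)
    config = json.loads(path.read_text(encoding="utf-8"))

    storage = config["spec"]["storage"]
    for volume in ("data", "logs"):
        storage[volume]["className"] = class_name

    # Write the file back with all other settings left untouched.
    path.write_text(json.dumps(config, indent=4) + "\n", encoding="utf-8")
    return config


# Example with the folder and Storage Class used in this post (not executed here):
# set_storage_class("kubeadm-custom/control.json", "vsan")
```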

This is the only change that you have to apply. And finally, you can start the deployment of your SQL Server 2019 Big Data Cluster with the following command:

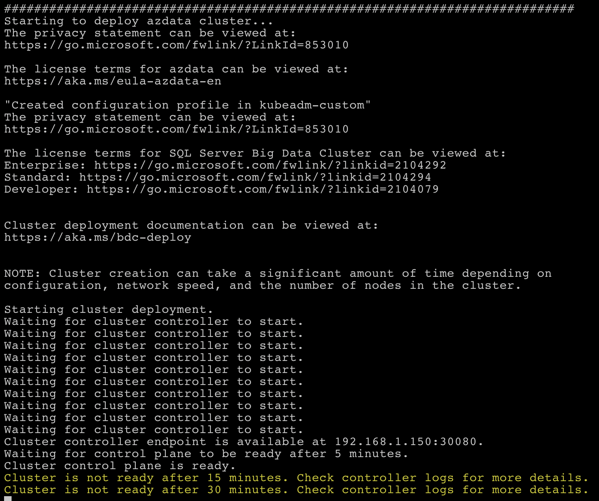

azdata bdc create --config-profile kubeadm-custom

The deployment now takes quite some time to finish, because all the necessary Docker Images have to be pulled from the Microsoft Container Registry. In my case, the whole deployment took around 1 hour…

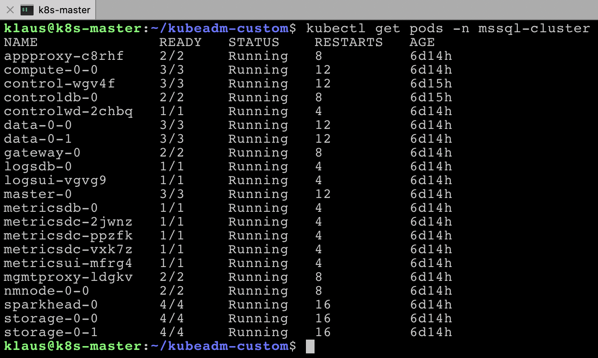

When the deployment is finished, you can check the status of the various deployed Kubernetes Pods with the following command:

kubectl get pods -n mssql-cluster
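If you would rather tally the Pods and containers from a script than count rows in the listing, a sketch like the following could work. It assumes that `kubectl` is on your PATH and that the cluster lives in the `mssql-cluster` namespace used above; the helper names are hypothetical, and init containers are deliberately not counted.

```python
import json
import subprocess


def count_pods_and_containers(pod_list):
    """Return (pod count, container count) for a `kubectl get pods -o json` result."""
    pods = pod_list.get("items", [])
    # Only regular containers are counted; init containers are ignored.
    containers = sum(len(pod["spec"]["containers"]) for pod in pods)
    return len(pods), containers


def fetch_pods(namespace="mssql-cluster"):
    """Fetch the pod list of a namespace by shelling out to kubectl."""
    result = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return json.loads(result.stdout)


# Example against a live cluster (not executed here):
# pods, containers = count_pods_and_containers(fetch_pods())
```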

In my deployment there are 21 Kubernetes Pods, which together run 45 Docker Containers! As I said initially: Big Data Clusters are a product within a product, and there are a lot of different things to explore…

You can also check the status of your SQL Server 2019 Big Data Cluster with the azdata tool:

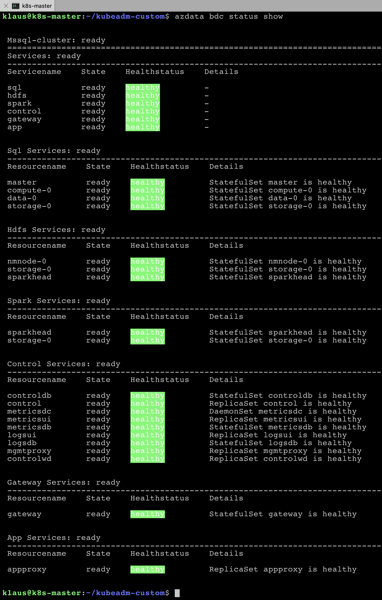

azdata bdc status show

If there are no problems, everything should be green:

Connecting to your SQL Server 2019 Big Data Cluster

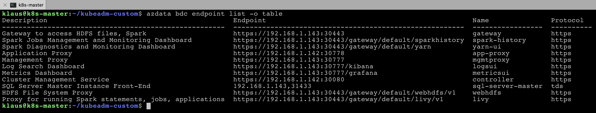

After you have successfully deployed your Big Data Cluster, the next logical step is to connect to your Big Data Cluster and work with it. A SQL Server 2019 Big Data Cluster provides you a so-called SQL Server Master Instance, which is your entry point into your Big Data Cluster. You can get the address of that Master Instance with the following command:

azdata bdc endpoint list -o table

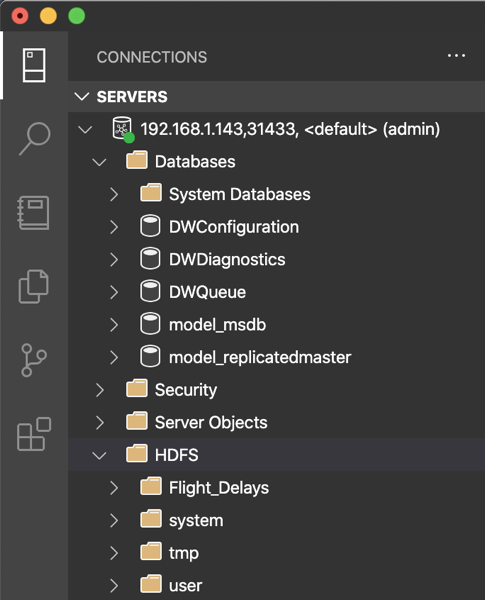

As you can see from this picture, there are also a lot of other endpoints available to access the HDFS File System, and the Spark system. I will cover these things in one of my next blog postings. My SQL Server Master Instance is accessible through the address 192.168.1.143,31433. So, let’s start Azure Data Studio, and connect to the SQL Server Master Instance.

As you can see, this is a traditional SQL Server Instance – but with the whole Big Data integration – the HDFS File System, and Spark.
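The same endpoint can also be used from application code. Note that SQL Server addresses separate host and port with a comma, not a colon. The following Python sketch only parses such an address and builds an ODBC connection string; it assumes that the Microsoft "ODBC Driver 17 for SQL Server" is installed on the client, and the helper names and the credentials in the example are hypothetical placeholders.

```python
def parse_sql_endpoint(address):
    """Split a SQL Server style 'host,port' address into (host, port)."""
    host, sep, port = address.partition(",")
    if not sep:
        return host.strip(), 1433  # no port given: SQL Server's default port
    return host.strip(), int(port)


def build_odbc_connection_string(address, user, password, database="master"):
    """Build an ODBC connection string for SQL authentication.

    Assumes the password contains no ';' or '}' characters, which would
    need extra escaping in an ODBC connection string.
    """
    host, port = parse_sql_endpoint(address)
    return (
        "DRIVER={ODBC Driver 17 for SQL Server};"
        f"SERVER={host},{port};DATABASE={database};UID={user};PWD={password}"
    )


# Example with the Master Instance address from this post (placeholder credentials):
# conn_str = build_odbc_connection_string("192.168.1.143,31433", "admin", "<password>")
```

The resulting string could then be handed to an ODBC client library such as the third-party pyodbc package.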

Summary

Running a SQL Server 2019 Big Data Cluster locally in your own Data Center on top of VMware vSphere is not that hard, especially with VMware vSphere 6.7 Update 3 and the Kubernetes vSphere Cloud Provider.

I hope that you have enjoyed this blog posting. Over the next weeks I will show you how you can work with your Big Data Cluster, and what features it provides you. Stay tuned!

Thanks for your time,

-Klaus

1 thought on “Running a SQL Server 2019 Big Data Cluster on VMware vSphere”

Hello Klaus,

We deployed SQL Server BDC on Red Hat, and when we restart the primary database replica, the primary SQL Server service does not come up.

Have you tried the above scenario?

Thank you in advance.