During the SQLRally conference in Copenhagen/Denmark, Brent Ozar (Blog, Twitter) and I had a very interesting conversation regarding Statistics on UNIQUEIDENTIFER columns. It seems that SQL Server shows some very nasty behavior here. Let’s have a look.

The repro

To show you the behavior we have complained about, I created a new database with the following simple table definition, where I enforced the primary key constraint on a UNIQUEIDENTIFER column. This means that SQL Server generates a unique Clustered Index in the background, and the Clustered Index itself has an underlying Statistics object that describes the data distribution in that column. Of course, the data distribution is linear, because every value in the UNIQUEIDENTIFIER column is by its nature unique.

-- Create a new table with a UNIQUEIDENTIFIER column as primary key. -- SQL Server will enforce the primary key constraint through unique clustered index in the background. CREATE TABLE CustomersTableGuid ( ID UNIQUEIDENTIFIER NOT NULL PRIMARY KEY, FirstName VARCHAR(50), LastName VARCHAR(50) ) GO

In the next step I have inserted 1 million rows into that table.

-- Insert 1 million records DECLARE @i INT = 0 WHILE (@i <= 1000000) BEGIN INSERT INTO CustomersTableGuid (ID, FirstName, LastName) VALUES ( NEWID(), 'FirstName' + CAST(@i AS VARCHAR), 'LastName' + CAST(@i AS VARCHAR) ) SET @i +=1 END GO

And now we update our statistics on that table with a FULLSCAN. A FULLSCAN means that SQL Server scans the complete underlying data to update the statistics object.

-- Let's update the Statistics with a FULLSCAN. UPDATE STATISTICS CustomersTableGuid WITH FULLSCAN GO

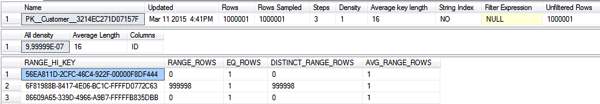

But when you look at the Statistics object now, you can see that SQL Server has only generated 3 steps in the Histogram.

As you can see in the header information, all 1 million rows were sampled during the Statistics update, but the Histogram shows us only 3 steps! But when you now update the Statistics object with a lower sampling interval, things are changing:

-- Let's update the Statistics with a smaller sampling interval. UPDATE STATISTICS CustomersTableGuid WITH SAMPLE 50 PERCENT GO

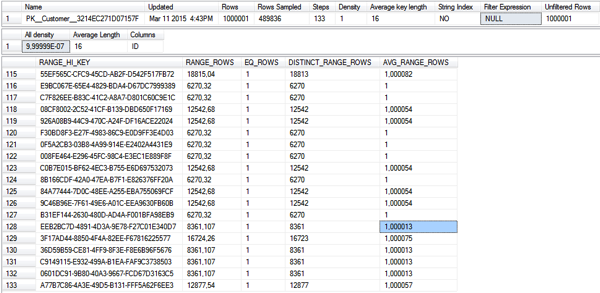

When you now look at the Histogram, you can see that we have a lot of different steps:

Summary

When Brent told me about this side-effect it made me smile. Bear this in mind when you work with UNIQUEIDENTIFIER columns in your database design. As long as the values are unique, you should not have a performance problem, because the row AVG_RANGE_ROW still makes the correct estimation of 1 row for the huge amount of gaps that you have in the Histogram.

Thanks for your time,

-Klaus

6 thoughts on “Statistics on UNIQUEIDENTIFIER columns”

Hello Klaus,

Thank you for this post. If you change the type of the ID column of the uniqueidentifier to bigint and set identity, have the same behavior. Ie. Three steps in the histogram. Ie. There is no difference in behavior between uniqueidentifier and bigint.

Hello Darko,

Thanks for your comments.

Ouch, that’s just crazy – I’ve never tried that with a BigInt data type ;-(

Cheers,

-Klaus

Yep, seen this in production.

Stop Clustering on GUIDS, because QO cant Cost joins properly using one line of stats…

Hello George,

Yeah, I’m also not really a big fan of GUIDs, but they are used very often in different databases (have you ever seen Dynamics CRM?).

Besides that, they *can* be interesting if you deal with the “Last Page Insert Latch Contention Problem”, because the GUID will spread your inserts across the *whole* leaf level of your Clustered Index.

Thanks,

-Klaus

I’m personally using GUIDs as PKs a lot. Ever wondered why anyone would do this? Here is the deal from developer’s point of view, the way Dynamics CRM had to deal with mapping documents from UI to the DB.

You can’t batch update a document *and* generate cross links between this new document and existing documents in the DB without generating the surrogate keys in the client application.

There it is, I said it.

You have a single ID in the header which goes as FK in the rows, then you have IDs in the rows which go FKs in the crosses for links to other documents in the DB.

The users are creating documents in the UI with multiple rows but do not persist these until everything is consistent from their point of view. They can hit ESC at any time and DB will not know anything about user’s intent — there is a point for the system being forgiving. So the business system is expected to batch update the whole document and generate links to other (existing) documents, and all this has been configured by the user in the UI beforehand.

We use Sequence objects to allow us to avoid guids and pre-create multiple related structures in the client/business layer and allow batch insert/updates.

Compact identifiers and safe pre-create.