In-Memory OLTP is all about In-Memory. But that’s only half the truth. In today’s blog posting I want to show you that even In-Memory OLTP can cause disk activity when you just read data from memory. The problem here is inaccurate statistics in combination with explicit sort operators in the execution plan.

The problem with the Sort Operator

As you might know, a sort operator needs a so-called Memory Grant for its execution. This memory area is used to perform the sorting of the incoming records in the execution plan. The size of this memory grant is based on the number of the estimated rows. During Cardinality Estimation the query optimizer estimates how many rows are expected by each operator in the execution plan.

Back in 2011 I wrote a blog posting that showed how a sort operator can spill over to TempDb when that estimate is wrong. And the same thing can happen in In-Memory OLTP: you have a sort operator in the execution plan, and when the estimated number of rows is wrong, the sort operator will spill over to TempDb! Let’s try to reproduce this scenario.

TempDb spills with In-Memory OLTP

Let’s create a new database which is configured with an In-Memory OLTP file group.

-- Create new database CREATE DATABASE InMemoryOLTP GO -- Add MEMORY_OPTIMIZED_DATA filegroup to the database. ALTER DATABASE InMemoryOLTP ADD FILEGROUP InMemoryOLTPFileGroup CONTAINS MEMORY_OPTIMIZED_DATA GO USE InMemoryOLTP GO -- Add a new file to the previously created file group ALTER DATABASE InMemoryOLTP ADD FILE ( NAME = N'InMemoryOLTPContainer', FILENAME = N'C:\Program Files\Microsoft SQL Server\MSSQL12.SQL2014\MSSQL\DATA\InMemoryOLTPContainer' ) TO FILEGROUP [InMemoryOLTPFileGroup] GO

In the next step I create a new memory optimized table.

-- Create a test table CREATE TABLE Table1 ( Column1 INT IDENTITY, Column2 INT CONSTRAINT pk_Column1 PRIMARY KEY NONCLUSTERED HASH (Column1) WITH (BUCKET_COUNT = 1) ) WITH ( MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_ONLY ) GO

As you can see from the table definition, I have created a hash index on the column Column1. And because it is a hash index, you also have to specify how many hash buckets you want to have in the underlying hash table. In my case I just have specified 1 hash bucket, which is very, very bad idea. With just one hash bucket you will get a huge amount of hash collisions when you insert records into that table. Normally you should have as many hash buckets as you have unique values in the column on which you have defined your hash index. The following code inserts 14001 records into the previously created table.

-- Insert 14001 records INSERT INTO Table1(Column2) VALUES (1) SELECT TOP 14000 IDENTITY(INT, 1, 1) AS n INTO #Nums FROM master.dbo.syscolumns sc1 INSERT INTO Table1 (Column2) SELECT 2 FROM #nums DROP TABLE #nums GO

You can check the number of hash collisions through the DMV (Dynamic Management View) sys.dm_db_xtp_hash_index_stats. As you can see from the output of this DMV, you have 140001 records in the one and only hash bucket of this hash index. And now let’s run a SELECT statement that includes an explicit sort operator in the execution plan.

-- The sort operator in this execution plan spills over to TempDb! SELECT * FROM Table1 ORDER BY Column1

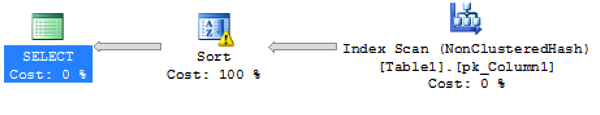

When you now look at the execution, you can see that the sort operator is spilled over to TempDb.

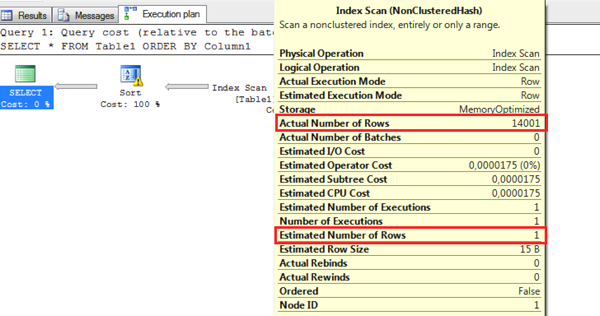

This happens because of the inaccurate statistics on the hash index. When you look at the Index Scan (NonClusteredHash) operator in the execution plan, you can see that the Query Optimizer just expected one row from our hash index. In reality we returned 140001 rows.

The estimation of the Index Scan (NonClusteredHash) operator is always based on the number of hash buckets in the underlying hash index. The assumption that the Query Optimizer makes here is that you have no hash collisions – which in our case is not correct. Therefore the memory grant for the sort operator is dimensioned according to that incorrect estimate, and has to spill over to TempDb. On my system this query takes around 80ms , which is a very long time for an In-Memory technology.

How can you fix that problem? Drop your table, and carefully plan the number of hash buckets in your hash index. Welcome to the wonderful world of In-Memory OLTP…

Summary

When you use hash indexes with In-Memory OLTP you have to carefully design your hash bucket count for your hash indexes. When they are wrong, the performance will suffer. I also already blogged more than a year ago, about how hash collisions will decrease the performance of In-Memory OLTP – even with no TempDb spills!

The moral of the story: with hash collisions in your hash indexes you can’t expect an amazingly fast performance with In-Memory OLTP, because they introduce a huge overhead and also affect the cardinality estimation.

Thanks for your time,

-Klaus