In todays blog posting I want to concentrate in more details on the Updateable Clustered ColumnStore Index that is introduced with SQL Server 2014. Before we go down to the details, I want to give you a brief overview about their first appearance in SQL Server 2012, and their limitations.

ColumnStore Indexes in SQL Server 2012

The introduction of ColumnStore Indexes in SQL Server 2012 was one of the hottest new features (besides AlwaysOn). If chosen right, they can make an impressive performance improvement for Data Warehousing workloads. Unfortunately, they had 2 big limitations:

- There was only the support for Non-Clustered ColumnStore Indexes

- As soon as you had created a ColumnStore Index on a table, the underlying table was read only, and no changes to the data were allowed anymore

Both limitations were a huge bummer for some customers. Imagine you had a table with 300 GB of traditional row store data. With a ColumnStore Index it’s possible to compress that data down to a size of 30 GB. But SQL Server 2012 only allowed a Non-Clustered ColumnStore Index, means you had to store your data twice: one in the traditional row store format, and once in the new ColumnStore format. That’s a huge waste of storage, because your queries will (hopefully) only use your Non-Clustered ColumnStore Index.

And as soon as you had created your Non-Clustered ColumnStore Index, you were not allowed to change the underlying table data anymore – your table was just read only! Of course, there were some workarounds for this problem, like Partition Switching, but you still needed to implement that on your own…

ColumnStore Indexes in SQL Server 2014

Things are changing now with SQL Server 2014, because Microsoft has resolved the above mentioned issues – with some magic and illusion: SQL Server 2014 provides you an Updateable Clustered ColumnStore Index! Let’s have a more detailed look on how this magic and illusion happens internally in SQL Server.

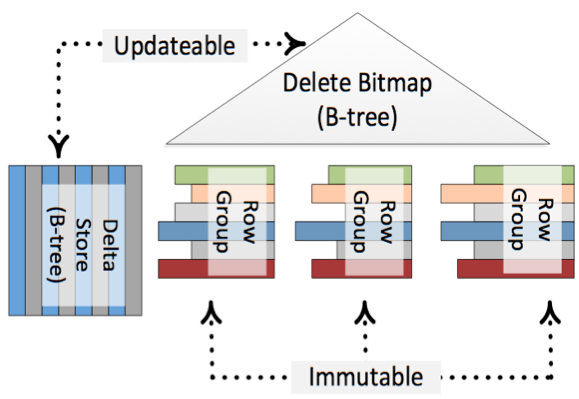

The first most important fact is that an underlying direct update of a ColumnStore Index is not possible! It would be too time consuming to do the complete decompress and compress on the fly during your INSERT, UPDATE, and DELETE transactions. Therefore SQL Server 2014 uses help from some magic: Delta Stores and Delete Bitmaps. Let’s have a more detailed look on both concepts.

Every time when you run an INSERT statement, the new record isn’t directly inserted into the ColumnStore Index – the record is inserted into a Delta Store. The Delta Store itself is nothing else than a traditional B-Tree structure with all its pro’s and con’s. When you afterwards read from the ColumnStore Index, SQL Server returns you the data from the compressed ColumnStore Index AND also from the Delta Store.

When you run an DELETE statement, again nothing happens in the compressed ColumnStore Index. The only thing that happens is that the record is deleted logically through a Delete Bitmap. Every record in the ColumnStore Index has a corresponding bit in that Delete Bitmap. When you again read your ColumnStore Index, SQL Server just discards the rows that are marked as deleted in the Delete Bitmap.

And running an UPDATE statement just means inserting the new version into the Delta Store, and marking the old version as deleted in the Delete Bitmap. Easy, isn’t it? The following picture (source http://research.microsoft.com/apps/pubs/default.aspx?id=193599) shows this concept in more details.

Because of the Delta Stores and the Delete Bitmap it seems that your ColumnStore Index is updateable, but in reality it is just immutable. There is also a background process called the Tuple Mover, which runs regularly and finally pushes your changes asynchronously into the compressed ColumnStore Index.

In addition you can also finally define a ColumnStore Index in SQL Server 2014 as Clustered. This means that you don’t need to have your data in the traditional row store format anymore. Just create your table and create a Clustered ColumnStore Index on it. You can some with this approach huge space savings in your storage, because everything is now compressed. When you work in more details with the concepts of relational databases, Clustered always means Sorted. But with a Clustered ColumnStore Index this statement is not true: when you create a Clustered ColumnStore Index, there is NO sorting order in your data! Just be aware of this tiny little fact 😉

Summary

Updateable Clustered ColumnStore Indexes are a nice magical illusion of SQL Server 2014. Don’t get me wrong: I really like the possibilities introduced with this new feature, but you have to understand how the feature is implemented internally, if you want to make the best use of it.

Thanks for reading

-Klaus

8 thoughts on “The Illusion of Updateable Clustered ColumnStore Indexes”

Hi Klaus, Thanks for the tip at the end about sorting in the clustered column store index, and how changes to the clustered indexes are physically stored.

From initial testing changes to the table seems a bit slower than a table that doesn’t have a column store index. Have you also experienced it? Be that as it may, it is still a fantastic feature!

Regards,

Heinrich

Another limitation with clustered column store index in 2014 is you can’t access the Clustered column store table via link server, where as in SQL 2012 non clustered column store index we can access table via link server.

When there is a Delete operation, if the row is in DeltaStore and not merged with the ColumnStore then the DB engine directly deletes the row Physically. But if the merge has happened and the row is in ColumnStore (not in DeltaStore) then the row is just Logically Deleted by makring an entry in Delete Bitmap, and an Index Rebuild operation is required to remove the row Physically.

Regarding your comment about inserts going into delta stores with SQL Server 2014, the following excerpt comes from the Microsoft research white paper “Enhancements to column store indexes” ( section 4.2 ):

Large bulk insert operations do not insert rows into delta stores but

convert batches of rows directly into columnar format

Hello Chris,

Thanks for your comment.

Yes, bulk insert operations are going directly into the ColumnStore format.

Thanks

-Klaus

Hi,

A late comment on this article:

With both BULK- and any other INSERT to a Clustered Columnstore Index, there is a threshold of 102.400 rows. If the INSERT is smaller than 102.400 rows, the rows till go into the delta store. If the INSERT is 102.400 rows or larger, the rows will go directly into a new compressed rowgroup.

The “perfect” number of rows for each insert is 1.048.576 rows, which is the maximum number of rows for a compressed rowgroup.

Hi Klaus, thank you for this insightful article. It made me understand why my TSQL MERGE statements were the longest running processes in my ETL. Having said that, when I take it into perspective the Merge only consumed about 14% of the total duration. So all in all, the Insert and Updates on Clustered Columnstore Indexes are pretty quick.

Regards,

Louw

Hello Louw,

Thanks for your comment.

Good to know that my blog posting helped you.

Thanks,

-Klaus