Today I want to talk about a very specific topic in SQL Server: Instant File Initialization. If Instant File Initialization is enabled for your SQL Server instance, you can get a tremendous performance improvement under specific circumstances. Instant File Initialization defines how the SQL Server engine interacts with the Windows OS when allocating new space in data files.

The Problem

When you allocate new space in a data file in the default configuration, SQL Server internally calls Win32 API functions that zero-initialize the newly allocated NTFS clusters. This means that every byte of newly allocated space is overwritten with zero values (0x0). This behavior prevents access to old data that was previously stored physically in the same NTFS clusters. The zero-initialization takes place during the following SQL Server operations:

- Creating a new database

- Auto growing a database

- Restoring a database backup

When you create a 50 GB database file, SQL Server first has to fill that new block with 50 GB of zero values, and this can take a lot of time. Let’s have a look at the following CREATE DATABASE statement.

    -- Create a new 50 GB database
    CREATE DATABASE TestDatabase ON PRIMARY
    (
        NAME = N'TestDatabase',
        FILENAME = N'G:\SQL\DATA\TestDatabase.mdf',
        SIZE = 51200000KB,
        FILEGROWTH = 1024KB
    )
    LOG ON
    (
        NAME = N'TestDatabase_log',
        FILENAME = N'G:\SQL\Log\TestDatabase_log.ldf',
        SIZE = 1024KB,
        FILEGROWTH = 10%
    )
    GO

As you can see from the code, I’m creating a data file of 50 GB. In my default SQL Server configuration this statement takes around 16 seconds, because SQL Server writes 50 GB of zeros to the storage through a Win32 API function. Now imagine that you have a corrupt database (e.g. 50 GB in size) and you want to restore a backup. What people normally do first is delete the corrupted database. This means that the database files are gone, and SQL Server has to recreate the files at the start of the restore operation:

- In the first step, SQL Server creates an “empty” database of 50 GB, and the data file is zero-initialized in the NTFS file system

- In the final step, the backup is restored, and SQL Server again writes 50 GB of data into the data files

As you can see, with this approach you are writing 100 GB of data to your storage! If you restore your backup *over* the existing files instead, SQL Server can skip the first step and writes just 50 GB of data to your storage. You have halved the amount of data written, so the restore can finish in roughly half the time!
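A restore over the existing files could look roughly like the following. Treat it as a minimal sketch: the backup path is a made-up example (it does not come from my setup above), and the statement assumes that nobody else is connected to the database while the restore runs.

```sql
-- Sketch: restore OVER the existing database instead of dropping it first.
-- The backup file name below is hypothetical; replace it with your own path.
USE master;
GO

RESTORE DATABASE TestDatabase
FROM DISK = N'G:\SQL\Backup\TestDatabase.bak'   -- hypothetical backup file
WITH REPLACE,    -- overwrite the existing database of the same name
     RECOVERY;   -- bring the database online when the restore has finished
GO
```

The important part is that there is no DROP DATABASE in front of the RESTORE, so the existing 50 GB data file stays in place and can be reused.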

Instant File Initialization

If you don’t want SQL Server to zero-initialize your data files, you can reconfigure SQL Server. If you grant the privilege Perform Volume Maintenance Tasks to the service account under which SQL Server is running, SQL Server will skip the zero-initialization of the data files once you have restarted SQL Server. Keep in mind that this only applies to data files: log files are ALWAYS zero-initialized in SQL Server! There is NO WAY around that! Without the zero-initialization of the log file, the crash recovery process would have no idea where to stop when the log file has wrapped around. Crash recovery stops where it finds zero values in the header of the next log record to be processed.

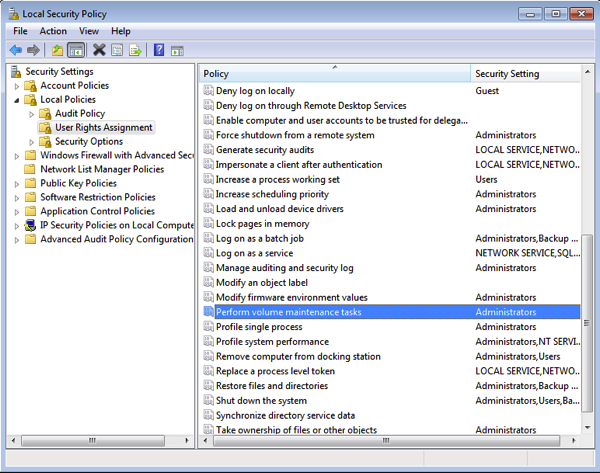

You can grant the privilege Perform Volume Maintenance Tasks to the service account of SQL Server through secpol.msc.

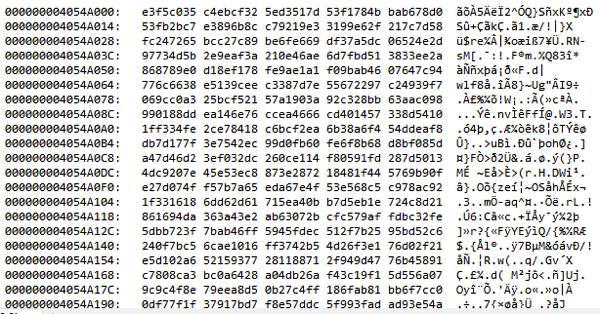

After a restart, SQL Server is now able to skip the zero-initialization of data files. When I run the CREATE DATABASE statement from above again, it takes around 250ms. That’s a huge difference! The side-effect? You are able to retrieve the old content that was stored in the allocated NTFS clusters through the DBCC PAGE command:

    -- Enable DBCC trace flag 3604
    DBCC TRACEON(3604)
    GO

    -- Dump out a page somewhere in the data file
    -- A hex dump is working here
    DBCC PAGE (TestDatabase, 1, 1000, 2)
    GO

As you can see, I’m just dumping out a page somewhere in my data file. In that case, SQL Server may return some garbage data, i.e. data that was previously stored in the newly allocated NTFS clusters and that has no relevance to SQL Server.

By granting this permission to SQL Server, you are essentially opening a security hole: users (with the right permissions) are able to retrieve old data that was previously stored in the file system. So you have to think very carefully about whether you grant the permission to SQL Server or not.

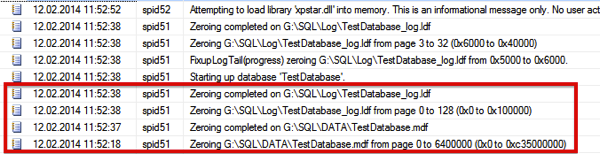

If you want to know whether your SQL Server is running with this permission, you can enable the trace flags 3004 and 3605. With these trace flags enabled, SQL Server reports in the error log which files are zero-initialized. When you afterwards create a new database and the permission wasn’t granted to SQL Server, you can see from the error log that data AND log files were zero-initialized:

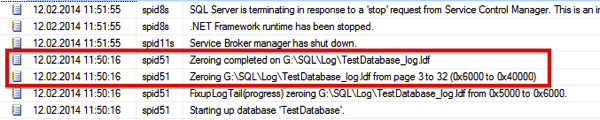

If SQL Server has the permission Perform Volume Maintenance Tasks, you can see from the error log that ONLY the log file was zero-initialized.
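The check described above can be scripted along the following lines. This is a sketch under a few assumptions: the database name IFI_Check is a hypothetical throwaway name, the search string 'Zeroing' is what I expect the error log messages to start with, and the column instant_file_initialization_enabled in sys.dm_server_services only exists on newer builds (to my knowledge starting with SQL Server 2016 SP1), so the first query will fail on older versions.

```sql
-- Option 1 (assumption: a build that exposes this column):
-- ask the DMV directly whether Instant File Initialization is active
SELECT servicename,
       service_account,
       instant_file_initialization_enabled
FROM sys.dm_server_services;
GO

-- Option 2: use trace flags 3004 and 3605 as described in the text.
-- 3004 reports the zeroing operations, 3605 redirects that output to the error log.
DBCC TRACEON(3004, 3605, -1);
GO

-- Hypothetical throwaway database, created only to trigger file initialization
CREATE DATABASE IFI_Check;
GO

-- Search the current SQL Server error log for the zeroing messages.
-- Only the .ldf file should show up when Instant File Initialization is active.
EXEC sys.sp_readerrorlog 0, 1, N'Zeroing';
GO

-- Clean up: remove the test database and switch the trace flags off again
DROP DATABASE IFI_Check;
GO
DBCC TRACEOFF(3004, 3605, -1);
GO
```

Don't forget to switch the trace flags off again afterwards, otherwise every file growth keeps writing these messages into your error log.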

The Windows Internals

But what happens under the hood in the Windows OS when you grant the permission Perform Volume Maintenance Tasks to the service account under which SQL Server is running? With this permission enabled (it’s internally called SE_MANAGE_VOLUME_NAME by the Win32 API), SQL Server is able to call the Win32 API function SetFileValidData. As you can see from the documentation, the process that calls that function has to have the permission SE_MANAGE_VOLUME_NAME. When that function is called by SQL Server, the function itself just sets the valid data length of the file (the so-called High Watermark): the file is just expanded WITHOUT overwriting the old content in the underlying NTFS clusters! As the documentation says:

“The SetFileValidData function allows you to avoid filling data with zeros when writing nonsequentially to a file. The function makes the data in the file valid without writing to the file. As a result, although some performance gain may be realized, existing data on disk from previously existing files can inadvertently become available to unintended readers.”

…

“If SetFileValidData is used on a file, the potential performance gain is obtained by not filling the allocated clusters for the file with zeros. Therefore, reading from the file will return whatever the allocated clusters contain, potentially content from other users. This is not necessarily a security issue at this point, because the caller needs to have SE_MANAGE_VOLUME_NAME privilege for SetFileValidData to succeed, and all data on disk can be read by such users.”

As I have said earlier, whether you enable that specific permission for your SQL Server instance or not is mainly a security decision.

Summary

Should you now enable Instant File Initialization for your SQL Server instance, or not? It dep… When you are the SQL Server AND Windows administrator, it’s a good idea to grant that permission, because as a Windows admin you always have access to the file system anyway. But when you have dedicated Windows and SQL Server admins, it can happen that the Windows admin doesn’t trust you, and that you don’t get that permission for your SQL Server instance. In that case SQL Server will always zero-initialize the data and log files…

Thanks for reading

-Klaus