Every index in SQL Server can improve your query performance, if the Query Optimizer chooses the index in the execution plan for data retrieval. But on the other hand every index will also hurt your performance, because the index has to be maintained during INSERT, UPDATE, and DELETE operations. Therefore it is very important to create as few indexes as possible for your workload – otherwise you will have huge performance problems during write operations.

During my SQL Server Performance Tuning Workshop in London earlier this month I talked about this specific problem. One question was how SQL Server reacts when you have defined the maximum of 999 Non-Clustered Indexes on a table. I have never ever created as many as 999 indexes on a table, therefore it was a good exercise to verify this behavior in more detail, and talk about all the various side-effects that are introduced.

Let’s create a terrible table!

The following code shows you how to create a really terrible table: I’m just creating 999 times the same Non-Clustered Index – over and over again.

-- Create a simple table CREATE TABLE PerformanceKiller ( Col1 INT IDENTITY(1, 1) PRIMARY KEY NOT NULL, Col2 INT NOT NULL, Col3 CHAR(100) NOT NULL ) GO -- Create 999 Non-Clustered Indexes... CREATE INDEX idx_StupidIndex1 ON PerformanceKiller(Col2) CREATE INDEX idx_StupidIndex2 ON PerformanceKiller(Col2) CREATE INDEX idx_StupidIndex3 ON PerformanceKiller(Col2) CREATE INDEX idx_StupidIndex4 ON PerformanceKiller(Col2) ... CREATE INDEX idx_StupidIndex997 ON PerformanceKiller(Col2) CREATE INDEX idx_StupidIndex998 ON PerformanceKiller(Col2) CREATE INDEX idx_StupidIndex999 ON PerformanceKiller(Col2) GO

This behavior of SQL Server is already crazy: SQL Server doesn’t complain in any way if you create the *same* Non-Clustered Index over and over again. You only have to make sure that the chosen index name is unique…

999 is also the maximum number of Non-Clustered Indexes for a table in SQL Server. If you try to create one additional Non-Clustered Index, SQL Server will return an error message:

Msg 1910, Level 16, State 1, Line 1060

Could not create nonclustered index ‘idx_StupidIndex1000’ because it exceeds the maximum of 999 allowed per table or view.

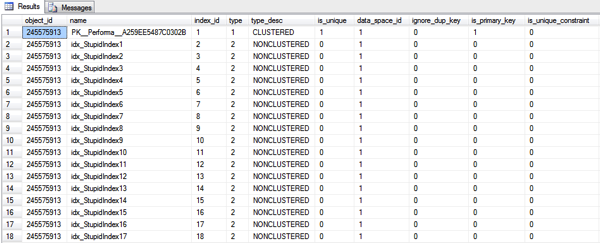

When you look now into sys.indexes for the table, you can see 1000 records: one record for the Clustered Index, and 999 records for the individual Non-Clustered Indexes…

After the creation of all these indexes, I then inserted 100000 rows into that table. Now let’s run a few specific SQL statements against that table, to change the data. First of all, let’s update 50 records with a simple UPDATE statement.

-- Let's perform a "simple" UPDATE statement against 50 rows... BEGIN TRANSACTION UPDATE PerformanceKiller SET Col2 = Col2 + 1 WHERE Col2 BETWEEN 100 AND 150 ROLLBACK GO

Ouch, that statement was really slow! On my system it took around 5 seconds! Imagine that! 200 milliseconds to change 50 rows. But in reality you haven’t only changed 50 rows: for every row you had to change the corresponding index record in the Clustered/Non-Clustered Indexes! In our case we have 1000 indexes defined on that table, and therefore we had to perform 50000 index updates! (1000 x 50). And that SQL statement generated more than 200000 logical reads! Let’s perform a simple DELETE statement against 100 rows in a transaction without committing it:

-- Let's perform a "simple" DELETE statement against 100 rows...

BEGIN TRANSACTION

DELETE FROM PerformanceKiller

WHERE Col2 BETWEEN 200 AND 300

SELECT

database_transaction_log_record_count,

database_transaction_log_bytes_used

FROM sys.dm_tran_database_transactions

WHERE database_id = DB_ID('TooMuchIndexes')

ROLLBACK

GO

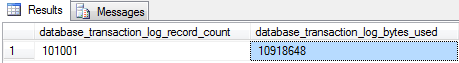

Again, you have to perform 100000 index deletes! (1000 x 100). And SQL Server has to log everything in the Transaction Log. If you look into the DMV sys.dm_tran_database_transactions, you can see that we need 10 megabytes for the current, ongoing transaction in the Transaction Log. This is a huge overhead that you have created here.

And now imagine that you have to rollback this transaction. On my system the rollback of this transaction took about 500 milliseconds! So it would take a much longer time to bring the database back into a consistent state.

Summary

It doesn’t matter what you are doing with a table that consists of 999 Non-Clustered Indexes: everything will just be slow! As I have said at the beginning of this blog posting, you should create the fewest possible Non-Clustered Indexes appropriate for your workload. The fewer indexes you have, the less overhead you create for your write workload.

Please feel free to leave a comment on how many indexes you may have previously seen on tables.

Thanks for your time,

-Klaus

2 thoughts on “999 Non-Clustered Indexes on a Table – a good idea?”

Hi Klaus

Nice article, good reading as usual…

To add to the list of overhead and side-effects, from the top of my head:

1. impact on the index maintenance processes. If this would be one of the largest tables in the system, it may very well never reach a state where all the indexes are defraged, stats the same.

2. assuming that with this number of non-clustered indexes one would get a missing index warning for this tabl, another side effect would be handling these missing indexes.

3. what if the clustering key is improper and you have to change it (imagine a system where a DBA would have inherited it)?

And there may be a good bunch of other ones.

Hello Alin,

Thanks for your comment.

I don’t want to think about all of these other side-effects… 😉

-Klaus